It was time for my quarterly disaster recovery drill, which involves bootstrapping my entire system from scratch using my scripts and backups. I took the opportunity to put some hard numbers to my previous observations regarding ext4 vs. Btrfs performance on my T430 running Qubes OS R4.1.1.

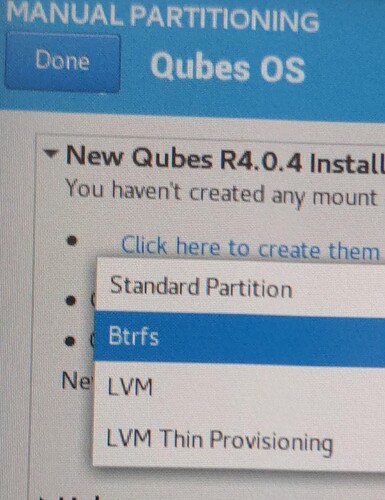

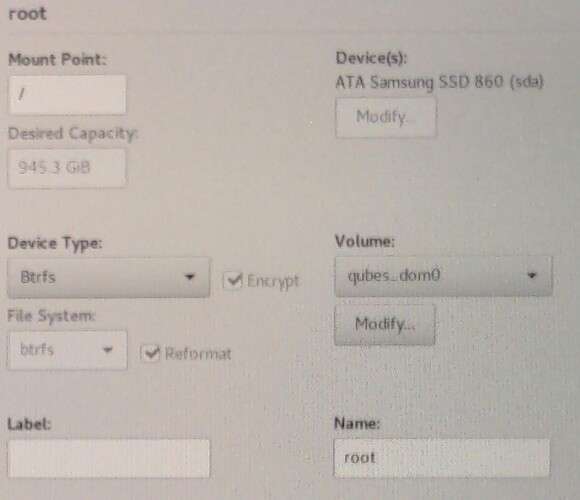

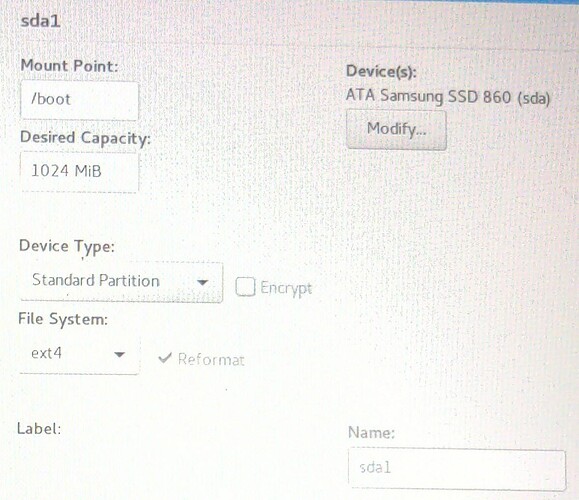

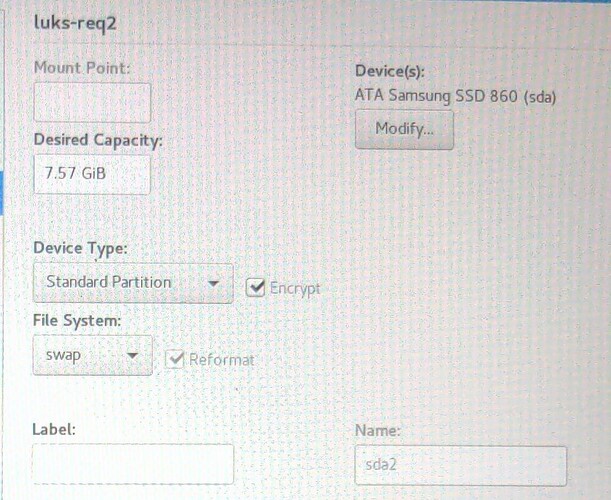

I did two rounds. In the first I installed with ext4, restored everything, and ran @wind.gmbh’s qube start benchmark and @oijawyuh’s SSD write test. Then I reinstalled and restored again, this time with Btrfs, and ran the same tests. Both times I made sure dom0 was fully up to date and at the same version as in the previous round.

| | |
|---|---|
| Qubes release: | 4.1.1 (R4.1) |
| Brand/Model: | Lenovo ThinkPad T430 (23477C8) |
| BIOS: | CBET4000 Heads-v0.2.0-1246-g9df4e48 |
| Xen: | 4.14.5 |
| Kernel: | 5.15.63-1 |
| RAM: | 16340 Mb |
| CPU: | i7-3840QM CPU @ 2.80GHz |
| SCSI: | Samsung SSD 860 Rev: 2B6Q |
| HVM: | Active |
| I/O MMU: | Active |
| HAP/SLAT: | Yes |
| TPM: | Device present |
| Remapping: | yes |

So the following numbers come from a controlled environment: the same hardware with the same bits present. Nothing is different except the file system. I think it is justified to call the delta “significant”.

| | start | write |
|---|---|---|
| ext4 | 9.35 s | 466.8 MB/s |
| Btrfs | 7.74 s | 765.3 MB/s |
| Δ | 1.61 s (21%) | 298.5 MB/s (64%) |

Actual measurements (the values in the table above are the means of each set of ten runs):

ext4 start = 9.09, 9.44, 9.51, 9.64, 9.37, 9.24, 9.42, 9.14, 9.42, 9.25 s

ext4 write = 383, 424, 463, 467, 501, 446, 425, 522, 521, 516 MB/s

Btrfs start = 7.70, 7.73, 7.67, 7.68, 7.71, 7.58, 7.82, 7.83, 7.82, 7.83 s

Btrfs write = 760, 768, 773, 758, 768, 765, 750, 761, 780, 770 MB/s
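For anyone who wants to check the arithmetic or plug in their own runs, here is a minimal Python sketch that recomputes the means and deltas from the measurements above. The helper names (`summarize`, `welch_t`) are mine and purely illustrative. The Welch t statistic is an addition that was not part of the original tests, included only as a rough indicator of how large the gap is compared with the run-to-run noise. The sketch reports the percentage against both bases, because the 21% for start time matches the delta taken relative to the Btrfs mean, while the 64% for write speed matches the delta taken relative to the ext4 mean.

```python
from math import sqrt
from statistics import mean, stdev

# Raw measurements copied from the post (10 runs each).
ext4_start = [9.09, 9.44, 9.51, 9.64, 9.37, 9.24, 9.42, 9.14, 9.42, 9.25]   # s
ext4_write = [383, 424, 463, 467, 501, 446, 425, 522, 521, 516]             # MB/s
btrfs_start = [7.70, 7.73, 7.67, 7.68, 7.71, 7.58, 7.82, 7.83, 7.82, 7.83]  # s
btrfs_write = [760, 768, 773, 758, 768, 765, 750, 761, 780, 770]            # MB/s


def welch_t(a, b):
    """Welch's t statistic: difference of means over its estimated standard error."""
    se = sqrt(stdev(a) ** 2 / len(a) + stdev(b) ** 2 / len(b))
    return (mean(a) - mean(b)) / se


def summarize(ext4, btrfs):
    """Return the means, the absolute delta, and the delta relative to each base."""
    m_ext4, m_btrfs = mean(ext4), mean(btrfs)
    delta = abs(m_ext4 - m_btrfs)
    return {
        "ext4_mean": m_ext4,
        "btrfs_mean": m_btrfs,
        "delta": delta,
        "pct_of_ext4": 100 * delta / m_ext4,
        "pct_of_btrfs": 100 * delta / m_btrfs,
        "welch_t": welch_t(ext4, btrfs),
    }


start = summarize(ext4_start, btrfs_start)
write = summarize(ext4_write, btrfs_write)

for name, unit, s in (("start", "s", start), ("write", "MB/s", write)):
    print(
        f"{name}: ext4 {s['ext4_mean']:.2f} {unit}, Btrfs {s['btrfs_mean']:.2f} {unit}, "
        f"delta {s['delta']:.2f} {unit} "
        f"({s['pct_of_ext4']:.0f}% of ext4, {s['pct_of_btrfs']:.0f}% of Btrfs), "
        f"Welch t = {s['welch_t']:.1f}"
    )
```

Keep in mind that each file system was installed only once, so the statistic only speaks to noise between runs within one installation, not to variation between installations.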

Maybe others can do similar measurements when reinstalling and share them in this thread. I’d be very interested to see if these performance gains are general in nature or somehow specific to my hardware. If general in nature, maybe a case should be made to the development team to adopt Btrfs as a default.