@adw @unman

My conclusion is that the update check is enabled by default, but it depends on either the `updates-proxy-setup` service or internet access through a netvm to download repository data. This means that, when the default tinyproxy is used, the more app qubes are running, the more copies of the Packages files and other repository data are downloaded in parallel. With apt-cacher-ng properly configured, that data is cached once and reused by every app qube based on the same parent TemplateVM to report available updates to dom0.

Let's take a corner case as an example. You will get what I mean, and maybe this should be linked to UX (@ninavizz). Consider offline qubes without any netvm connected: vault, and any other qubes based on specialized templates created for vault-related work (separate, unconnected app qubes whose software is expected to be kept updated for safety). We can then extend this to sys-net, sys-usb, sys-gui, sys-log, sys-xxx.

For update notifications to appear in dom0 on a daily basis, update checks depend on two Qubes services, covering two cases: app qubes with a netvm assigned (1), and app qubes without a netvm assigned (2, plus 3 when cacher/apt-cacher-ng is used):

1. To check update availability for parent templates: `qubes-update-check`

   - For networked qubes, this normally happens through the qube's netvm.

   - With apt-cacher-ng, the update check fails silently, since repository URLs are rewritten: a qube that goes directly through its netvm, rather than through the update proxy, cannot reach `http://HTTPS///` URLs.

2. To talk to the update proxy (tinyproxy/apt-cacher-ng) and get package update lists even without a netvm: `updates-proxy-setup`

3. For `updates-proxy-setup` to work from app qubes (vault, sys-usb, etc.), the Qubes policy needs to be modified so that any qube can talk through the update proxy. In my use case, this lets all app qubes get update notifications when the `updates-proxy-setup` service is activated in a template-based qube:

```
[user@dom0 ~]$ sudo cat /etc/qubes/policy.d/30-user.policy
qubes.UpdatesProxy * @anyvm @default allow target=cacher
#qubes.UpdatesProxy * @tag:whonix-updatevm @default allow target=sys-whonix
#qubes.UpdatesProxy * @type:AppVM @default allow target=cacher
#qubes.UpdatesProxy * @type:TemplateVM @default allow target=cacher
```
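To double-check which `qubes.UpdatesProxy` rules are actually active (not commented out), a small filter like the following could help. It is a minimal sketch: `active_updatesproxy_rules` and `anyvm_proxy_target` are hypothetical names, both read policy text on stdin, and they only list matching lines. They do not reproduce qrexec's own evaluation (file ordering, first matching rule wins).

```bash
# Hypothetical helpers: both read Qubes policy text on stdin.

# Print only the active (uncommented) qubes.UpdatesProxy rules.
active_updatesproxy_rules() {
  # Commented-out rules start with '#', so they do not match this pattern.
  grep -E '^[[:space:]]*qubes\.UpdatesProxy[[:space:]]'
}

# Print the target= value of active qubes.UpdatesProxy rules whose source is @anyvm.
# Assumes the field layout used above: service argument source target action params...
anyvm_proxy_target() {
  awk '$1 == "qubes.UpdatesProxy" && $3 == "@anyvm" {
    for (i = 6; i <= NF; i++)
      if ($i ~ /^target=/) print substr($i, 8)
  }'
}
```

In dom0, the same `sudo cat` as above can feed it: `sudo cat /etc/qubes/policy.d/*.policy | active_updatesproxy_rules`.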

Notes:

For service qubes, `updates-proxy-setup` needs to be activated so that they can talk through the update proxy. This applies to sys-usb, vault, and any other user-defined “air-gapped” qubes. As we know, tinyproxy is completely dumb here and lets anything in an app qube reach the internet through it. With apt-cacher-ng, file patterns are defined, and only URLs of the `http://HTTPS///` form, defined in the parent templates, are allowed through the update proxy.
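The `http://HTTPS///` form can be illustrated with a small sketch. It assumes that a template's repository URLs are rewritten from `https://host/path` to `http://HTTPS///host/path`, as described above for cacher/apt-cacher-ng; `to_cacher_url` and `is_cacher_url` are hypothetical helpers, not cacher's actual code.

```bash
# Hypothetical helper: rewrite an https:// repository URL into the
# http://HTTPS/// form used with apt-cacher-ng; other URLs are left untouched.
to_cacher_url() {
  printf '%s\n' "$1" | sed 's|^https://|http://HTTPS///|'
}

# Hypothetical check: succeed only if the URL is already in the http://HTTPS/// form.
is_cacher_url() {
  case "$1" in
    http://HTTPS///*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `to_cacher_url https://deb.debian.org/debian` prints `http://HTTPS///deb.debian.org/debian`. Such a URL only makes sense to the caching proxy, which is why a qube going straight through its netvm cannot use it.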

In my current testing:

- shaker/cacher is safer, but requires app qubes to have `updates-proxy-setup` enabled and the dom0 policy to be changed in order to get update notifications.

For UX:

- The Qubes update GUI should show the timestamp of the last update (currently missing), so that users know when they last updated their templates.

- The Qubes update GUI should show when the last report of available updates was received, so that users know when they last received an update notification.

This is a case where updating the documentation alone would leave users vulnerable.

To diagnose the status of the current system:

- Obtain the status of the `qubes-update-check` service for all non-template qubes (shows which app qubes will check for updates and report to dom0):

```bash
qvm-ls --raw-data --fields NAME,CLASS | awk -F '|' '$2 != "TemplateVM" {print $1}' | while read -r qube; do [ -n "$qube" ] || continue; echo "$qube:qubes-update-check"; qvm-service "$qube" qubes-update-check; done
```

- Obtain the status of the `updates-proxy-setup` service for all non-template qubes (shows which app qubes will download repository data and report updates through the defined update proxy; this is important when using cacher, since otherwise app qubes cannot reach the repositories):

```bash
qvm-ls --raw-data --fields NAME,CLASS | awk -F '|' '$2 != "TemplateVM" {print $1}' | while read -r qube; do [ -n "$qube" ] || continue; echo "$qube:updates-proxy-setup"; qvm-service "$qube" updates-proxy-setup; done
```
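The two loops above differ only in the service name, so they could be folded into one report. This is a sketch, not part of Qubes: `non_template_qubes` and `report_update_services` are hypothetical names, and the filter assumes `qvm-ls --raw-data --fields NAME,CLASS` prints `name|class` lines without a header, as the loops above already rely on.

```bash
# Hypothetical filter: read "NAME|CLASS" lines on stdin and print the names
# of all qubes whose class is not TemplateVM (same selection as the loops above).
non_template_qubes() {
  awk -F '|' 'NF >= 2 && $1 != "" && $2 != "TemplateVM" { print $1 }'
}

# Hypothetical report, meant to be run in dom0: for each non-template qube,
# print what qvm-service returns for both update-related services.
report_update_services() {
  local qube svc
  qvm-ls --raw-data --fields NAME,CLASS | non_template_qubes | while read -r qube; do
    for svc in qubes-update-check updates-proxy-setup; do
      # </dev/null keeps qvm-service from consuming the loop's input.
      printf '%s:%s: %s\n' "$qube" "$svc" "$(qvm-service "$qube" "$svc" </dev/null)"
    done
  done
}
```

Running `report_update_services` in dom0 prints one `qube:service: value` line per qube and service.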

For offline qubes based on specialized templates, users running apt-cacher-ng may want to activate the `updates-proxy-setup` service in those qubes to be notified of available updates. The offline qube can then reach the repositories defined in its template through the `http://HTTPS///` URLs and report update availability to dom0; otherwise, it cannot report update notifications to dom0. Also note that the dom0 policy needs to allow @anyvm to talk to the proxy; otherwise, RPC error notifications appear in dom0.
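For such offline qubes, enabling the service is one `qvm-service QUBE updates-proxy-setup on` call per qube in dom0. A minimal sketch for several qubes at once follows. The function name `enable_updates_proxy` and the `QVM_SERVICE` override (only there to allow a dry run with `echo`) are hypothetical, and the dom0 policy still has to allow these qubes to use `qubes.UpdatesProxy`.

```bash
# Hypothetical helper: enable updates-proxy-setup in each qube given as argument.
# Set QVM_SERVICE=echo to print the commands instead of running them (dry run).
enable_updates_proxy() {
  local qube
  for qube in "$@"; do
    ${QVM_SERVICE:-qvm-service} "$qube" updates-proxy-setup on
  done
}
```

For example, `QVM_SERVICE=echo enable_updates_proxy vault sys-usb` only prints the two commands, and `enable_updates_proxy vault sys-usb` runs them.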

@unman: Let's remember that, with cacher, apt-cacher-ng manages its own cache expiration, caches package lists, and serves them to any qube asking for package updates, which are then pushed to dom0 as update notifications. So yes: the first app qube asking for a package list will actually make cacher download it. Other app qubes asking for the same package list will get it from cacher, not from the internet, so no additional traffic is generated. Any app qube based on a specialized template will of course cause additional repository information to be downloaded through cacher, but again, that package list will be downloaded only once for each specialized template. After all, this is an advantage users expect from cacher: download once for all specialized templates.

Again, please correct me if I'm wrong:

EDIT: `qubes-update-check` is indeed activated by default, but it requires either internet access or the `updates-proxy-setup` service to be activated in app qubes so that they use the update proxy (important to reduce the bandwidth used when multiple app qubes check for updates of the same template through cacher).

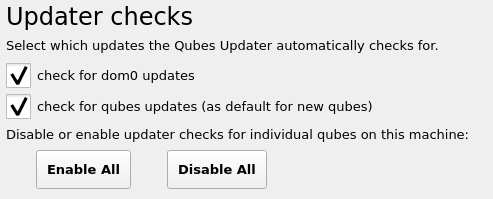

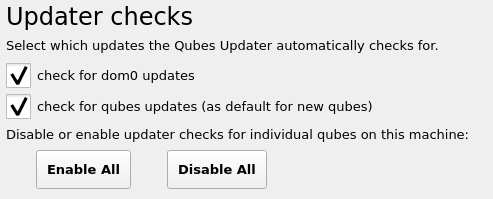

- A default Qubes installation has `qubes-update-check` enabled for all qubes. The default installation also activates the service under Qubes Global Settings.

@adw: this might mean (please confirm) that package lists are downloaded, and re-downloaded (since tinyproxy does not cache them), by all qubes through the update proxy, even by the non-connected vault app qube. Using cacher reduces the bandwidth needed here, with or without specialized templates.

EDIT: The following was false and was hidden:


Consequently, and important to know: vault is actually a source of dom0 update notifications, since on a default installation it refreshes package lists through tinyproxy, like any other supposedly "air-gapped" qube.

EDIT: What is true: vault and other air-gapped qubes, having no netvm, would depend on the `updates-proxy-setup` service being enabled, which it is not by default.