Cleaning the template's dnf cache immediately triggered the issue:

[user@fedora-36-current ~]$ sudo dnf clean all

24 files removed

[user@fedora-36-current ~]$ sudo dnf update

Fedora 36 - x86_64 2.2 MB/s | 81 MB 00:36

Fedora 36 openh264 (From Cisco) - x86_64 0.0 B/s | 0 B 00:00

Errors during downloading metadata for repository 'fedora-cisco-openh264':

- Curl error (56): Failure when receiving data from the peer for https://codecs.fedoraproject.org/openh264/36/x86_64/os/repodata/repomd.xml [Received HTTP code 403 from proxy after CONNECT]

Error: Failed to download metadata for repo 'fedora-cisco-openh264': Cannot download repomd.xml: Cannot download repodata/repomd.xml: All mirrors were tried

Fedora 36 - x86_64 - Updates 163 B/s | 8.1 kB 00:50

Errors during downloading metadata for repository 'updates':

- Curl error (28): Timeout was reached for http://ohioix.mm.fcix.net/fedora/linux/updates/36/Everything/x86_64/repodata/repomd.xml [Operation too slow. Less than 1000 bytes/sec transferred the last 30 seconds]

- Downloading successful, but checksum doesn't match. Calculated: 480fdb1f22d8831cfac19f1efe3005eccbfbbf79a4d4d3cc3bd238345d6418abc6022157ba74d07f7b0cd219bbe47cc2f081f65221b76fcfedcd99a52948572d(sha512) 480fdb1f22d8831cfac19f1efe3005eccbfbbf79a4d4d3cc3bd238345d6418abc6022157ba74d07f7b0cd219bbe47cc2f081f65221b76fcfedcd99a52948572d(sha512) 480fdb1f22d8831cfac19f1efe3005eccbfbbf79a4d4d3cc3bd238345d6418abc6022157ba74d07f7b0cd219bbe47cc2f081f65221b76fcfedcd99a52948572d(sha512) Expected: 51e6aae77c3e848e650080c794985684750e4db9d3b11e586682d14d7cc94fa9f8b37a7a19a53b8251fdced968f8b806e3a7b1a9ed851c0c8ff4e1f6fb17c68f(sha512) 986e21b3c06994ab512b3f576610ebeff0492c46f15f4124c7ed2ed6f3745c2ad2f9894d06e00849f7465cb336b1f01764c31e3002c9288b1a9525253d3bf3af(sha512) 608ef25404a5194cf1a2f95fd74a4dafd5f3ad69755ddab21ffefe00bcaf432df803fa477c2bf323247f96abe7b71a778314b2aee8aec537e01756a9a274d26f(sha512)

- Downloading successful, but checksum doesn't match. Calculated: ffa3d254134aa8811d80816fc33bbb9ea89e531794773457c0cd0b7a28f67bde1d86208c94408051f6c157e80737c337e06bc320a1401cb0df74542f472b4e3b(sha512) ffa3d254134aa8811d80816fc33bbb9ea89e531794773457c0cd0b7a28f67bde1d86208c94408051f6c157e80737c337e06bc320a1401cb0df74542f472b4e3b(sha512) ffa3d254134aa8811d80816fc33bbb9ea89e531794773457c0cd0b7a28f67bde1d86208c94408051f6c157e80737c337e06bc320a1401cb0df74542f472b4e3b(sha512) Expected: 51e6aae77c3e848e650080c794985684750e4db9d3b11e586682d14d7cc94fa9f8b37a7a19a53b8251fdced968f8b806e3a7b1a9ed851c0c8ff4e1f6fb17c68f(sha512) 986e21b3c06994ab512b3f576610ebeff0492c46f15f4124c7ed2ed6f3745c2ad2f9894d06e00849f7465cb336b1f01764c31e3002c9288b1a9525253d3bf3af(sha512) 608ef25404a5194cf1a2f95fd74a4dafd5f3ad69755ddab21ffefe00bcaf432df803fa477c2bf323247f96abe7b71a778314b2aee8aec537e01756a9a274d26f(sha512)

- Downloading successful, but checksum doesn't match. Calculated: 63dcd7af35db555497c38e9e25693dcc3873b38994906de1271fdf17354b4de0dedd68b5cddef499bd3da5d8fb6f607cc4808d5cf1849241228c1568d36e8778(sha512) 63dcd7af35db555497c38e9e25693dcc3873b38994906de1271fdf17354b4de0dedd68b5cddef499bd3da5d8fb6f607cc4808d5cf1849241228c1568d36e8778(sha512) 63dcd7af35db555497c38e9e25693dcc3873b38994906de1271fdf17354b4de0dedd68b5cddef499bd3da5d8fb6f607cc4808d5cf1849241228c1568d36e8778(sha512) Expected: 51e6aae77c3e848e650080c794985684750e4db9d3b11e586682d14d7cc94fa9f8b37a7a19a53b8251fdced968f8b806e3a7b1a9ed851c0c8ff4e1f6fb17c68f(sha512) 986e21b3c06994ab512b3f576610ebeff0492c46f15f4124c7ed2ed6f3745c2ad2f9894d06e00849f7465cb336b1f01764c31e3002c9288b1a9525253d3bf3af(sha512) 608ef25404a5194cf1a2f95fd74a4dafd5f3ad69755ddab21ffefe00bcaf432df803fa477c2bf323247f96abe7b71a778314b2aee8aec537e01756a9a274d26f(sha512)

Error: Failed to download metadata for repo 'updates': Cannot download repomd.xml: Cannot download repodata/repomd.xml: All mirrors were tried
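The output above mixes three different failures: a 403 from the proxy on CONNECT, a curl timeout, and checksum mismatches. As a rough triage aid, here is a minimal sketch that reads dnf output on stdin and prints one label per failure mode it finds. The function name and the labels are made up for this example; only the matched strings come from the log above.

```shell
#!/bin/sh
# Triage sketch: read dnf output on stdin, print one label per failure mode seen.
# classify_dnf_errors and its labels are hypothetical, chosen for this example.
classify_dnf_errors() {
    log=$(cat)

    # The proxy refused to tunnel an https:// repo URL (openh264 repo above).
    if printf '%s\n' "$log" | grep -qF 'Received HTTP code 403 from proxy after CONNECT'; then
        echo "proxy-refused-connect"
    fi

    # curl gave up because the transfer was too slow.
    if printf '%s\n' "$log" | grep -qF 'Curl error (28)'; then
        echo "timeout"
    fi

    # Metadata was downloaded but does not match the expected checksums.
    if printf '%s\n' "$log" | grep -qF "checksum doesn't match"; then
        echo "checksum-mismatch"
    fi
}
```

It could be fed with something like `sudo dnf update 2>&1 | classify_dnf_errors`. Only the checksum-mismatch case appears to be related to stale files in cacher's cache.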

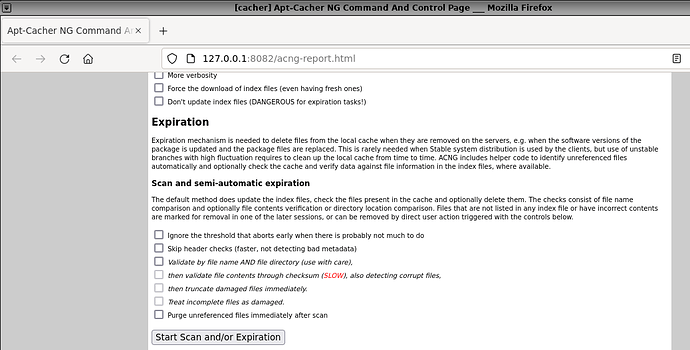

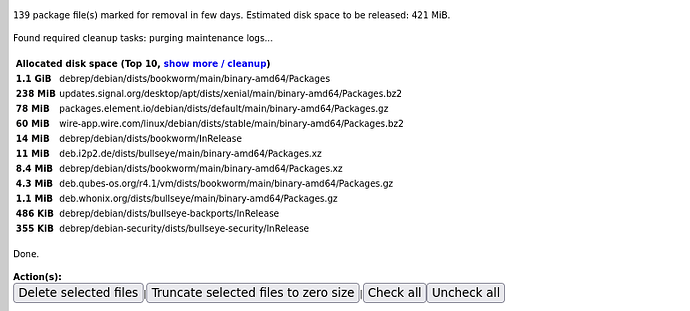

Running Scan and/or Expiration in cacher shows some zck files being automatically tagged for removal.

Selecting "Delete selected files":

And then "Delete now".
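To see which zck files cacher is currently holding before deleting anything, something like the following sketch could be run inside the cacher qube. It assumes the cache lives under /var/cache/apt-cacher-ng (the usual Debian default; check CacheDir in your acng.conf). The function name is hypothetical. It only lists files and does not reproduce the expiration logic that decides which ones are stale.

```shell
#!/bin/sh
# List zchunk metadata files under an apt-cacher-ng cache directory.
# list_cached_zck is a hypothetical helper; the default path is an assumption.
list_cached_zck() {
    dir=${1:-/var/cache/apt-cacher-ng}
    # Sorted so that the output is stable between runs.
    find "$dir" -type f -name '*.zck' | sort
}
```

Calling `list_cached_zck` with no argument uses the assumed default path; pass another directory as the first argument if your cache is elsewhere.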

Going back to the Fedora-36 template:

[user@fedora-36-current ~]$ sudo dnf update

Fedora 36 - x86_64 15 MB/s | 81 MB 00:05

Fedora 36 openh264 (From Cisco) - x86_64 0.0 B/s | 0 B 00:01

Errors during downloading metadata for repository 'fedora-cisco-openh264':

- Curl error (56): Failure when receiving data from the peer for https://codecs.fedoraproject.org/openh264/36/x86_64/os/repodata/repomd.xml [Received HTTP code 403 from proxy after CONNECT]

Error: Failed to download metadata for repo 'fedora-cisco-openh264': Cannot download repomd.xml: Cannot download repodata/repomd.xml: All mirrors were tried

Fedora 36 - x86_64 - Updates 2.2 MB/s | 30 MB 00:13

Qubes OS Repository for VM (updates) 80 kB/s | 159 kB 00:02

Ignoring repositories: fedora-cisco-openh264

Last metadata expiration check: 0:00:01 ago on Mon Nov 7 13:02:49 2022.

Dependencies resolved.

========================================================================================================================================================================

Package Architecture Version Repository Size

========================================================================================================================================================================

Upgrading:

exfatprogs x86_64 1.2.0-1.fc36 updates 86 k

firefox x86_64 106.0.4-1.fc36 updates 109 M

ghostscript x86_64 9.56.1-5.fc36 updates 37 k

ghostscript-tools-fonts x86_64 9.56.1-5.fc36 updates 12 k

ghostscript-tools-printing x86_64 9.56.1-5.fc36 updates 12 k

gtk4 x86_64 4.6.8-1.fc36 updates 4.8 M

java-17-openjdk-headless x86_64 1:17.0.5.0.8-2.fc36 updates 40 M

keepassxc x86_64 2.7.4-1.fc36 updates 7.5 M

libfido2 x86_64 1.10.0-4.fc36 updates 94 k

libgs x86_64 9.56.1-5.fc36 updates 3.5 M

mpg123-libs x86_64 1.31.1-1.fc36 updates 341 k

mtools x86_64 4.0.42-1.fc36 updates 211 k

osinfo-db noarch 20221018-1.fc36 updates 268 k

python-srpm-macros noarch 3.10-20.fc36 updates 23 k

thunderbird x86_64 102.4.1-1.fc36 updates 101 M

thunderbird-librnp-rnp x86_64 102.4.1-1.fc36 updates 1.2 M

tzdata noarch 2022f-1.fc36 updates 427 k

tzdata-java noarch 2022f-1.fc36 updates 149 k

vim-common x86_64 2:9.0.828-1.fc36 updates 7.2 M

vim-data noarch 2:9.0.828-1.fc36 updates 24 k

vim-enhanced x86_64 2:9.0.828-1.fc36 updates 2.0 M

vim-filesystem noarch 2:9.0.828-1.fc36 updates 19 k

Transaction Summary

========================================================================================================================================================================

Upgrade 22 Packages

Total download size: 277 M

Is this ok [y/N]:

@unman: from the test and report above, we can see that cacher is actually getting in the way of receiving updates unless manual action is taken.

Manually running sudo dnf update in Fedora-36, without first cleaning the template’s local cache and then cacher’s cache, reported no updates available, which was a false negative.

Cleaning the Fedora-36 cache caused the zck checksum mismatch error, which in turn required cleaning cacher’s cache.

What can be done so that Fedora templates correctly get available updates through cacher? Are there tweaks to its apt-cacher-ng configuration that have not been applied?