I have a little project to automate some stuff, and I would love to get your opinion on it.

Automate administration of remote administration (in Qubes OS)

So, remote administration. It’s nice: SSH keys, authenticated onion services, split-ssh, dispvms.

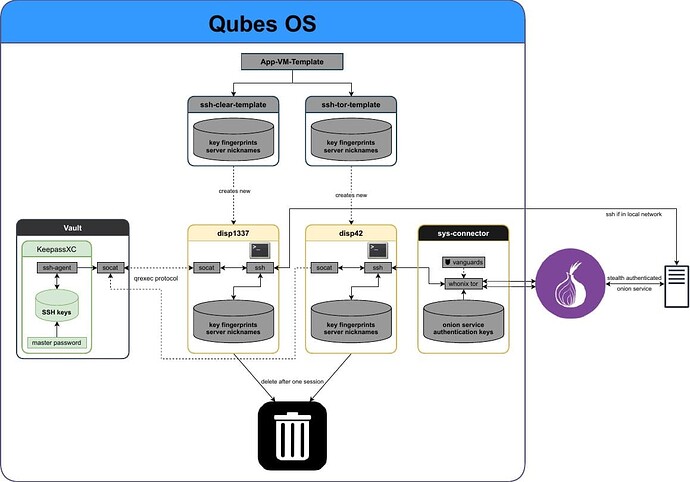

This is what it looks like:

Basically a split-ssh system using dispvms and authenticated onion services,

and another qube that is used for clearnet administration (ignore it, it

is not important right now)

The process

So how does one add a server to all that and make it chooch?

Five qubes are involved in my optimized process (my real-life one is even more complicated and annoying lol):

- [vanity] creates the vanity address

- [vault] has the password manager

- [sys-connector] Whonix-gw with the auth keys

- [tor-ssh-dvm] the ssh dvm

- [tor-ssh-dvm-template] the ssh dvm template

- [server] not a qube, but I will call it one for simplicity

But adding a new server is annoying. One has to:

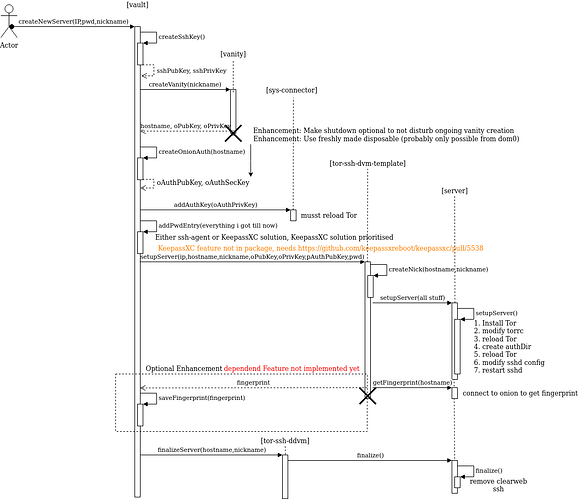

- [vault] Create ssh keys

- [vanity] Create vanity address for server

- [vault] Create authentication key for hidden service

- [vault] Create entry in password manager

- [sys-connector] Add authentication key to sys-connector and reload

- [vault] Add ssh key to password manager

- [tor-ssh-dvm-template] Connect via tor-ssh-dvm to server (IP)

- [server] Install Tor on server

- [server] Set up Tor onion service on server and start/enable it

- [server] Set up sshd to also listen on the onion service

- [tor-ssh-dvm-template] Create nickname on dvm-template for new server

- [tor-ssh-dvm-template] Connect via dvm-template (to add the ssh fingerprint and see if it works)

- [server] Configure server to only listen on onion service
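The server-side steps (onion service, sshd listening on it, then sshd restricted to the onion service) boil down to a few config lines. A minimal sketch of rendering them, assuming Tor reads `/etc/tor/torrc`, sshd uses port 22, and a hypothetical service directory `/var/lib/tor/ssh_onion`; the helper names are made up for illustration:

```python
# Sketch: render the config lines a new server needs (hypothetical helper names).
# Assumptions: Tor reads /etc/tor/torrc, sshd listens on port 22, and the
# onion service directory name "ssh_onion" is made up for illustration.

HS_DIR = "/var/lib/tor/ssh_onion"  # hypothetical HiddenServiceDir


def render_torrc_snippet(hs_dir: str = HS_DIR, port: int = 22) -> str:
    """torrc lines: expose the local sshd as an onion service."""
    return (
        f"HiddenServiceDir {hs_dir}\n"
        f"HiddenServicePort {port} 127.0.0.1:{port}\n"
    )


def render_sshd_snippet(onion_only: bool) -> str:
    """sshd_config lines for the two stages of the setup.

    Stage 1 (onion_only=False): nothing to change, a default sshd already
    listens on all addresses, including the 127.0.0.1 the onion service uses.
    Stage 2 (onion_only=True): bind to localhost only, so SSH is no longer
    reachable via the public IP, only through the onion service.
    """
    if not onion_only:
        return ""
    return "ListenAddress 127.0.0.1\n"


if __name__ == "__main__":
    print(render_torrc_snippet(), end="")
    print(render_sshd_snippet(onion_only=True), end="")
```

For a vanity address, the `hostname`, `hs_ed25519_public_key` and `hs_ed25519_secret_key` files from mkp224o would be copied into that directory (owned by the Tor user, mode 0700) before Tor is restarted, so Tor serves that key instead of generating a new one.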

99% of this process is annoying shit I don’t want to deal with.

I want to grab 50 VPS boxes, click “go” somewhere, and have stuff happen until SSH is set up for all of them, instead of wasting a week of manual work on this.

So let's do it! This is what my plan looks like.

Why did you…

Why vanity addresses?

Because I am dope. Also, I need to create the onion address on the administrating machine anyway, as I need the address for the key. I could read it from the server after installing Tor, but that makes things more complicated.
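Creating the address up front works because a v3 onion address is just the ed25519 public key plus a two-byte checksum and a version byte. A standard-library sketch (function names are hypothetical) that derives the address from a raw public key and, more usefully, re-checks an address handed back by another qube:

```python
import base64
import hashlib

# v3 onion address layout (Tor rend-spec-v3):
#   address  = base32(PUBKEY || CHECKSUM || VERSION) + ".onion"
#   CHECKSUM = SHA3-256(".onion checksum" || PUBKEY || VERSION)[:2]
VERSION = b"\x03"


def _checksum(pubkey: bytes) -> bytes:
    return hashlib.sha3_256(b".onion checksum" + pubkey + VERSION).digest()[:2]


def onion_address(pubkey: bytes) -> str:
    """Derive the v3 onion address from a raw 32-byte ed25519 public key."""
    if len(pubkey) != 32:
        raise ValueError("ed25519 public key must be 32 bytes")
    raw = pubkey + _checksum(pubkey) + VERSION  # 35 bytes -> 56 base32 chars
    return base64.b32encode(raw).decode("ascii").lower() + ".onion"


def check_onion_address(address: str) -> bytes:
    """Validate a v3 address (e.g. one handed back by another qube).

    Returns the embedded public key; raises ValueError if anything is off.
    """
    label = address.lower()
    if label.endswith(".onion"):
        label = label[: -len(".onion")]
    if len(label) != 56:
        raise ValueError("not a v3 onion address")
    raw = base64.b32decode(label, casefold=True)  # binascii.Error is a ValueError
    pubkey, checksum, version = raw[:32], raw[32:34], raw[34:]
    if version != VERSION:
        raise ValueError("unexpected version byte")
    if checksum != _checksum(pubkey):
        raise ValueError("checksum mismatch")
    return pubkey
```

mkp224o's `hs_ed25519_public_key` file should be a 32-byte header followed by the 32-byte key, so `onion_address(data[32:])` ought to reproduce the `hostname` file next to it.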

Why an additional vanity qube?

Because I have one with the max number of CPU cores anyway, because fast as fuck boiii. Qubes are cheap! I could do it in the vault qube, but then that qube would have n-1 CPU cores idling 99.9% of the time, or would be painfully slow the other 0.1%. Also, there would be more code in the vault that can explode. The other option would be a dispvm that gets created, has mkp224o installed, and then starts the brute forcing. I don’t think this is possible if the initiating qube is not dom0. If there is a way: please let me know!
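On the vanity qube the brute forcing itself is just mkp224o. A sketch of a wrapper that could run there, assuming mkp224o is on PATH, that its `-d` (output directory) and `-n` (stop after N keys) options behave as described in its help, and that it writes one sub-directory per key containing `hostname` and `hs_ed25519_public_key`; check these against `mkp224o -h` for your build. The function names are hypothetical:

```python
from __future__ import annotations

import re
import subprocess
import tempfile
from pathlib import Path

# Tor's on-disk public key format: 32-byte header followed by the 32-byte key.
PUB_HEADER = b"== ed25519v1-public: type0 ==\x00\x00\x00"


def mkp224o_argv(prefix: str, outdir: Path, count: int = 1) -> list[str]:
    """mkp224o command line: write keys to outdir, stop after `count` matches."""
    if not re.fullmatch(r"[a-z2-7]+", prefix):
        raise ValueError("onion prefixes only use base32 characters a-z and 2-7")
    return ["mkp224o", "-d", str(outdir), "-n", str(count), prefix]


def run_mkp224o(prefix: str, count: int = 1) -> Path:
    """Brute force into a fresh directory (runs on the vanity qube) and return it."""
    outdir = Path(tempfile.mkdtemp(prefix="onion-"))
    subprocess.run(mkp224o_argv(prefix, outdir, count), check=True)
    return outdir


def collect_keys(outdir: Path) -> list[tuple[str, bytes]]:
    """(hostname, raw public key) for every key directory mkp224o produced."""
    results = []
    for pub_file in sorted(outdir.glob("*/hs_ed25519_public_key")):
        data = pub_file.read_bytes()
        if len(data) != 64 or not data.startswith(PUB_HEADER):
            raise ValueError(f"unexpected key file format: {pub_file}")
        hostname = (pub_file.parent / "hostname").read_text().strip()
        results.append((hostname, data[len(PUB_HEADER):]))
    return results
```

Collecting by `hs_ed25519_public_key` rather than by directory name avoids depending on how mkp224o names its output folders.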

Why an additional Whonix-gw?

To mitigate the possibility of auth keys leaking in case there is an exploit for Whonix-gw. This gateway is only used to connect to my own services with authentication.
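For reference, v3 client authorization uses one x25519 key pair per client: the server gets the public half in `<HiddenServiceDir>/authorized_clients/<name>.auth`, and sys-connector gets the private half in a `<name>.auth_private` file in the directory set by `ClientOnionAuthDir` in its torrc. A sketch that renders both files from raw 32-byte keys; generating the pair itself is assumed to happen with a vetted library (for example `X25519PrivateKey.generate()` from the `cryptography` package), and the function names are hypothetical:

```python
import base64


def _b32(key: bytes) -> str:
    """Tor expects unpadded base32 for x25519 keys (52 characters for 32 bytes)."""
    if len(key) != 32:
        raise ValueError("x25519 keys are 32 bytes")
    return base64.b32encode(key).decode("ascii").rstrip("=")


def server_auth_line(client_public_key: bytes) -> str:
    """Contents of authorized_clients/<name>.auth on the server."""
    return f"descriptor:x25519:{_b32(client_public_key)}\n"


def client_auth_private_line(onion_address: str, client_private_key: bytes) -> str:
    """Contents of <name>.auth_private in sys-connector's ClientOnionAuthDir.

    Tor wants the 56-character address here without the ".onion" suffix.
    """
    label = onion_address.lower()
    if label.endswith(".onion"):
        label = label[: -len(".onion")]
    return f"{label}:descriptor:x25519:{_b32(client_private_key)}\n"
```

The `.auth_private` line is also why the onion address has to be known in vault before anything exists on the server.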

Why the tor-ssh-dvm?

If my SSH machine gets pwned, my servers are pwned. To minimize potential lateral movement, dispvms are the way to go here.

Why is the vault calling everything?

The chain of commands should go from trusted → untrusted to minimize lateral movement. While I do not love having vault do this from a usability perspective, I think it is the safest option. I also do not want to use dom0, because. More on that later.

Your returns go from untrusted → trusted, no good

Yeah. Not ideal. To be honest, I don’t think it is a big problem. Should the tor-ssh-dvm-template be malicious, I am fucked anyway. The rest is not network-facing, and the stuff I get in return would have been put into KeePassXC anyway. If you have another opinion or some improvements, please let me know.
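One cheap way to take the edge off the untrusted → trusted return path is to have vault run whatever comes back through a strict, minimal parser before it touches KeePassXC. A sketch for a host key line in `ssh-keyscan` format (`host keytype base64blob`), assuming only ed25519 host keys on onion hosts are expected; it also computes the `SHA256:` fingerprint in the format `ssh-keygen -l` prints, which could go into the entry's description. Names are hypothetical:

```python
from __future__ import annotations

import base64
import binascii
import hashlib
import re
import struct

ALLOWED_TYPES = {"ssh-ed25519"}  # assumption: servers only use ed25519 host keys
HOST_RE = re.compile(r"[a-z2-7]{56}\.onion")


def parse_host_key_line(line: str) -> tuple[str, str, bytes]:
    """Strictly parse 'host keytype base64' and return (host, keytype, blob)."""
    parts = line.strip().split()
    if len(parts) != 3:
        raise ValueError("expected exactly three fields")
    host, keytype, b64 = parts
    if not HOST_RE.fullmatch(host):
        raise ValueError("host is not a v3 onion address")
    if keytype not in ALLOWED_TYPES:
        raise ValueError(f"unexpected key type {keytype!r}")
    try:
        blob = base64.b64decode(b64, validate=True)
    except binascii.Error as exc:
        raise ValueError("invalid base64") from exc
    # The blob starts with a length-prefixed copy of the key type; it must agree.
    if len(blob) < 4:
        raise ValueError("truncated key blob")
    (n,) = struct.unpack(">I", blob[:4])
    if blob[4:4 + n].decode("ascii", "replace") != keytype:
        raise ValueError("key type inside blob does not match")
    return host, keytype, blob


def sha256_fingerprint(blob: bytes) -> str:
    """Fingerprint as ssh-keygen -l shows it: SHA256:<unpadded base64>."""
    digest = hashlib.sha256(blob).digest()
    return "SHA256:" + base64.b64encode(digest).decode("ascii").rstrip("=")
```

Anything that does not parse is rejected outright instead of being "cleaned up", which keeps the vault-side code small.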

Why is the arrow going out of the actor's abdomen?

I'm funny. hihihi

How?

Dunno. I'm thinking of some Python and/or Bash scripts on the qubes that are called with qvm-run. The return values would be transferred back to the vault via qvm-copy. Vanity addresses would be generated with mkp224o. KeePassXC is a bit more complicated, as there is no way to add SSH keys programmatically (yet). This will change when this gets merged; it should be in the testing build in a few weeks. Until then, one has to compile KeePassXC. The optional feature to save the fingerprint in the description is also not implemented. Alternatively, one can use the split-ssh solution without KeePassXC in the Qubes GitHub.
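The "vault calls everything" idea maps fairly naturally onto qrexec services. A minimal sketch of a vault-side driver, assuming one custom RPC service per step: the names `my.VanityOnion`, `my.AddOnionAuth` and `my.ProvisionServer` are hypothetical and would be scripts under `/etc/qubes-rpc/` in the target qubes, each allowed by a dom0 policy. It uses `qrexec-client-vm`, the client tool available in ordinary qubes (`qvm-run` is normally a dom0 tool); with no local program given, the service's stdout should come back to the caller, so results could be returned without a separate qvm-copy round trip:

```python
from __future__ import annotations

import subprocess
from dataclasses import dataclass

# Hypothetical qrexec services; each would be a script in /etc/qubes-rpc/
# inside the target qube, plus a dom0 policy entry allowing vault to call it.


@dataclass(frozen=True)
class Step:
    qube: str
    service: str
    description: str


PIPELINE = [
    Step("vanity", "my.VanityOnion", "create vanity address"),
    Step("sys-connector", "my.AddOnionAuth", "install client auth key, reload Tor"),
    Step("tor-ssh-dvm-template", "my.ProvisionServer", "set up Tor and sshd on the server"),
]


def qrexec_argv(step: Step) -> list[str]:
    # No local program: the remote service's stdin/stdout are wired to ours.
    return ["qrexec-client-vm", step.qube, step.service]


def run_step(step: Step, payload: bytes, dry_run: bool = True) -> bytes:
    """Send payload to the step's service and return what it prints."""
    argv = qrexec_argv(step)
    if dry_run:
        print("would run:", " ".join(argv))
        return b""
    done = subprocess.run(argv, input=payload, capture_output=True, check=True)
    return done.stdout  # untrusted: validate before storing it anywhere
```

Running with `dry_run=True` first only prints the commands, which is a cheap way to check the pipeline for all 50 boxes before anything touches a server.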

Known risks

ssh will offer each server the keys of all servers (in deterministic order!) until one matches. This way, if one server gets pwned, the adversary learns how many servers I administrate. Not really that critical if you ask me. Also, you have to increase the auth retries (MaxAuthTries on the server). One can fix this properly by fixing ssh-agent, or hotfix it by running multiple ssh-agents. Maybe someday I will fix this, but for now I'm OK with it.
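One option that may avoid offering every key without patching ssh-agent is OpenSSH's `IdentitiesOnly yes` combined with an `IdentityFile` pointing at the server's *public* key: ssh_config(5) documents that a public key file selects the matching private key loaded in the agent, and `IdentitiesOnly` stops ssh from offering the rest. A sketch that renders one `Host` block per server for the dvm template's `~/.ssh/config` (which also covers the nickname step), assuming a hypothetical layout of `~/.ssh/servers/<nickname>.pub` and `root` as the default user; whether it behaves the same through the split-ssh agent is worth testing:

```python
import re

NAME_RE = re.compile(r"[A-Za-z0-9_-]+")
ONION_RE = re.compile(r"[a-z2-7]{56}\.onion")


def ssh_host_block(nickname: str, onion: str, user: str = "root") -> str:
    """~/.ssh/config block: nickname -> onion host, offering only that server's key.

    IdentityFile points at the *public* key; with IdentitiesOnly, ssh offers
    only the agent key matching it instead of every key in the agent.
    """
    # Strict checks so a bad value cannot inject extra config lines.
    if not (NAME_RE.fullmatch(nickname) and NAME_RE.fullmatch(user)
            and ONION_RE.fullmatch(onion)):
        raise ValueError("invalid nickname, user or onion address")
    return (
        f"Host {nickname}\n"
        f"    HostName {onion}\n"
        f"    User {user}\n"
        f"    IdentityFile ~/.ssh/servers/{nickname}.pub\n"
        "    IdentitiesOnly yes\n"
    )
```

If this works as documented, each server only ever sees its own key, so the auth retry limit should not need raising either.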

A motivated network-side adversary could use this to:

- enumerate the number of servers (based on traffic amount and pattern)

- detect when new servers are added (traffic amount and pattern)

- detect which server I am connecting to

As a mitigation, I would add decoy keys.

I have not found a way to shuffle the order in which keys are offered, but I have not bothered much at this stage.

Alternatively, one could run an ssh-agent service for every server, but when I think of administrating many servers, this might not scale too well.

Question

Is this a good idea to begin with? I see people usually do such stuff from dom0, as it can, for example, create a dispvm and set it up for the mkp224o vanity onion creation, or more easily transfer files between qubes (for example with this “hack”). But: I like zsh and don’t want to fuck up my dom0. For example, I would give my script the IP and password of the servers, and I really **do not want to type a 50ish-character password into dom0**, but want to copy it to where it is needed.

I had no luck trying to transfer files from qubeA to qubeB without a dialog box. What I tried so far:

In /etc/qubes-rpc/policy/qubes.FileCopy

qubeA qubeB allow

qubeA @anyvm allow

qubeA qubeB ask

qubeA qubeB allow, default_target=qubeB

And a few others.
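One thing worth checking: `qvm-copy` does not name a target. It calls `qubes.Filecopy` with the `@default` target and lets the dom0 dialog pick the destination, so rules with `qubeB` in the target column likely never match that request. The policy file is also spelled `qubes.Filecopy` (lowercase c); service names are case-sensitive, so a file named `qubes.FileCopy` would not be consulted. A rule that sends `@default` to a fixed qube uses `allow,target=...` on R4.0 (`default_target=` only pre-selects the entry in an `ask` dialog). A sketch that renders such a rule for both policy formats; the R4.1 line layout is an assumption to verify against the qrexec policy docs for your release, and the helper name is hypothetical:

```python
def filecopy_policy(source: str, target: str, r41: bool) -> str:
    """Policy line letting `source` qvm-copy to `target` without a dialog.

    Put it above any generic '@anyvm @anyvm ask' rule: the first match wins.
    """
    if r41:
        # R4.1: e.g. /etc/qubes/policy.d/30-user.policy
        # columns: service argument source target action [params]
        return f"qubes.Filecopy * {source} @default allow target={target}\n"
    # R4.0: /etc/qubes-rpc/policy/qubes.Filecopy
    # columns: source target action[,params]
    return f"{source} @default allow,target={target}\n"
```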

Also, the dispvms that later SSH into the servers would need permission to use split-ssh. Is there a way to allow split-ssh for all dispvms of a specific template? I tried some configurations but did not have much luck.
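A candidate to try: qrexec policies have an `@dispvm:<name>` keyword for disposables based on `<name>`. ASSUMPTION: that it matches in the *source* column on your Qubes release is not verified here, so check the qrexec policy documentation before relying on it. A sketch rendering a `qubes.SshAgent` rule (the service name used by the common split-ssh setup) for both policy formats; the helper name is hypothetical:

```python
def ssh_agent_policy(dvm_template: str, vault: str, r41: bool) -> str:
    """Let every disposable based on dvm_template use the split-ssh agent in vault.

    ASSUMPTION: '@dispvm:<name>' matches, as a *source*, disposables created
    from <name> on your Qubes release; verify before relying on it.
    """
    source = f"@dispvm:{dvm_template}"
    if r41:
        # R4.1: columns are service argument source target action
        return f"qubes.SshAgent * {source} {vault} allow\n"
    # R4.0: /etc/qubes-rpc/policy/qubes.SshAgent
    return f"{source} {vault} allow\n"
```

Switching `allow` to `ask` would keep a confirmation dialog per connection, at the cost of the one-click automation.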