How do I install this via salt?

Partway there:

(It doesn’t update arkenfox daily, but it does combine it with the split browser.)

There isn’t a salt package to set this up currently.

It’s on my list.

I installed this task manager yesterday and have two questions:

I installed pi-hole, and it seems to have set everything up: it switched all my qubes over to pi-hole.

I still have internet, so everything seems fine, but is there a way to reach the pi-hole web UI to check that pi-hole is really working?

I wanted to use netstat in the pihole qube to check which port it's running on, but it seems the network tools aren't installed there.
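If `ss` from iproute2 is installed, `ss -tln` lists listening TCP sockets. Where neither tool is present, the kernel's own socket tables in /proc/net can be read directly. A minimal bash sketch under that approach; `listening_ports` is a hypothetical name, and it only decodes the port column and the LISTEN state code (`0A`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: list listening TCP ports without netstat or ss,
# by reading the kernel tables /proc/net/tcp and /proc/net/tcp6.
# Data lines look like "   0: 00000000:0050 00000000:0000 0A ...":
# field 2 is the local address as HEXIP:HEXPORT, field 4 the state (0A = LISTEN).
listening_ports() {
  local files=("$@")
  local f local_addr state
  if [ "${#files[@]}" -eq 0 ]; then
    files=(/proc/net/tcp /proc/net/tcp6)
  fi
  for f in "${files[@]}"; do
    [ -r "$f" ] || continue
    # tail -n +2 skips the header line; read splits each line on whitespace
    while read -r _ local_addr _ state _; do
      if [ "$state" = "0A" ]; then
        # the port is the hex digits after the last ':'; printf converts to decimal
        printf '%d\n' "0x${local_addr##*:}"
      fi
    done < <(tail -n +2 "$f")
  done | sort -nu
}

# When executed (not sourced), print the listening ports, one per line.
if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  listening_ports "$@"
fi
```

In the pihole qube, port 80 (the web server behind /admin) and port 53 (DNS) would typically be the ones to look for.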

Second thing: I also installed split-ssh, but I can't see a qube for it.

If I try to install it again, it says it's already installed.

It’s available at the standard address, http://127.0.0.1, or via `pihole -a`.

If you ran qubes-task or qubes-task-gui from a terminal, you would have seen any feedback there. Was the installation successful?

Try removing it with `sudo dnf remove 3isec-qubes-sys-ssh-agent` and reinstalling.

Oh, yeah, inside the pihole qube it works if I go to the IP followed by /admin.

No, sadly no success feedback, because my laptop froze while installing (probably because I had too many qubes and windows running, and I didn't realize it would also configure everything for me; I thought I'd have to do the networking myself afterwards).

But I'll try your command! Thanks! <3

Yep, it's still happening, and I'm now pretty sure it wasn't pi-hole that froze my qubes.

I tried to remove cacher, since I'm unsure whether this is related to my Parrot template (maybe it didn't install properly).

So I wanted to remove cacher and reinstall it.

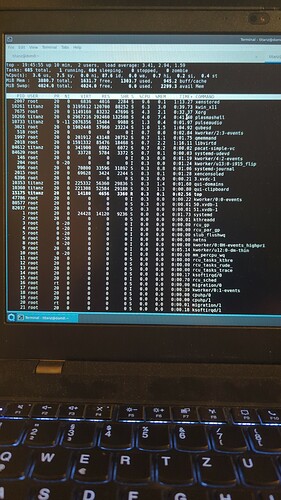

Qubes still freezes while uninstalling it. I had top open so I could see whether the CPU was at 100%, since the fans were spinning at full speed.

But nothing noteworthy shows up there.

I get the lighttpd placeholder page when I try to access the pihole WebUI. CLI tools work, and filtering does too, just not the WebUI.

Not sure if it’s a problem with the installation process or the current version of pihole, or something else entirely.

@dipole you have to add /admin to your IP address to reach the pi-hole dashboard; at first I also thought something was wrong.

Anyhow, today I took some time to check out unman's templates.

Setting up split-gpg was easy as … and it's working.

Now I'm messing around with split-ssh.

First, I didn't notice there's a GitHub page with the README; linking it here for everyone else:

This helped me a lot to get further.

Some things about the setup were unclear, like where we get the SshAgent policy from.

I just took it from the repo linked in unman's repo, but I'm not sure whether we still need it.

Then the “edit the contents of client” step also confused me, until I noticed I could find the client file above. Anyhow, everything is working.

I'm just stuck on one issue, and I'd love anyone who could help, because I've been messing around with it for about 4 hours now.

In the split-ssh AppVM there's a work-agent.sh whose content looks like this:

    #!/usr/bin/bash
    export SSH_AUTH_SOCK=/run/user/1000/ssh-work.socket
    ssh-add keys/work/id_rsa

Everything is pretty easy to understand, I guess? When this script is called, it sets the SSH_AUTH_SOCK variable to the socket path, and then it just adds the RSA key.

If I run this script, I receive the key in my work AppVM.
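A possible generalisation of work-agent.sh, sketched in bash. Assumptions (not taken from the package): one directory of private keys per agent under `~/keys/<agent>/`, and sockets named like `ssh-work.socket` above; `load_agent_keys`, `agent_socket` and the 300-second default are hypothetical. Using `$HOME` instead of the relative `keys/work/id_rsa` means it no longer depends on the directory it is started from, and `ssh-add -t` gives each key a lifetime after which the agent drops it.

```shell
#!/usr/bin/env bash
# Hypothetical generalisation of work-agent.sh. Assumed layout (not from the package):
#   ~/keys/<agent>/id_*                  private keys for that agent (*.pub skipped)
#   /run/user/<uid>/ssh-<agent>.socket   the agent's socket, like ssh-work.socket

# Print the socket path for an agent name.
agent_socket() {
  printf '/run/user/%s/ssh-%s.socket\n' "$(id -u)" "$1"
}

# Load every private key in ~/keys/<agent>/ into that agent, each with a lifetime.
load_agent_keys() {
  local agent=$1 lifetime=${2:-300} key
  SSH_AUTH_SOCK=$(agent_socket "$agent")
  export SSH_AUTH_SOCK
  for key in "$HOME/keys/$agent"/id_*; do
    [[ $key == *.pub ]] && continue   # only private keys are added
    [ -f "$key" ] || continue         # no match: the glob stays unexpanded
    # -t: the agent drops this key again after $lifetime seconds
    ssh-add -t "$lifetime" "$key"
  done
}

# Example (hypothetical file name): ./load-agent-keys.sh work 300
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -ge 1 ]]; then
  load_agent_keys "$@"
fi
```

Keys protected by a passphrase will still prompt, so this only runs unattended for keys without one.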

But how can I run this script at startup, and do I even want to? What if I run multiple ssh agents? How do I decide which qube gets which agent?

I guess for that I just have to copy the work service and edit the work socket to my liking, and then edit the 30-user policy in dom0?

Anyhow, I'll figure this out.

My main problem is how to get this script to run automatically. I already tried adding a second ExecStart to the work service, but since it's Type=forking I can't add a second ExecStart, and I figure that if unman wanted us to add a second ExecStart, he wouldn't have chosen forking.

Then I tried putting something like this in my /rw/config/rc.local:

    /bin/bash /home/user/work-agent.sh

but this doesn't work for me either.

I also tried linking work-agent.sh into /etc/init.d/, but that didn't help either.

I guess once I get this working, I'll do a small write-up on how to use split-ssh; this could make my life sooo much easier.

Big thanks to everyone so far, and especially to unman for these user-friendly templates/AppVMs. /non-ironic

EDIT:

Yep, it's just copying the old service as a new one and enabling it with `systemctl --user enable`.

And if you don't want every qube to be able to reach the ssh keys, this helped me a lot:

I'd still be glad if someone could help me out with how to start the `*-agent` scripts that come by default.

EDIT the 2nd:

I see some problems coming with this.

If I run test-agent, which handles one ssh key, and then coding-agent, the keys get added together, so if I run `ssh-add -L` I see both keys. I don't know if this is the intended behavior.

I don't know how the gpg script works, but I'd like something similar for ssh-agent: pass the keys through for 5 minutes and then clear them.

The next thing I'd wish for: when I ssh to my server, it should check which AppVM the request comes from and run the correct script, i.e. if the AppVM runs git or ssh, it should add both keys, and after about 5 minutes they should be wiped again.

If I then run ssh in a different qube: same behavior, just with different keys.

I guess it's meant to work like this, but maybe there's a bug somewhere?
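To see whether keys really pile up in one agent, each agent socket can be queried on its own in sys-ssh-agent. A bash sketch, assuming the sockets follow the `ssh-<name>.socket` pattern in /run/user/<uid> (as `ssh-work.socket` does); `list_agent_keys` is a hypothetical name. If only one socket shows up, both scripts are probably loading keys into the same agent.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show which keys each ssh-agent socket holds.
# Assumes sockets named ssh-<name>.socket (like ssh-work.socket) in the
# user's runtime directory; pass another directory as $1 to override.
list_agent_keys() {
  local dir=${1:-/run/user/$(id -u)} sock name
  for sock in "$dir"/ssh-*.socket; do
    [ -e "$sock" ] || continue          # glob matched nothing
    name=${sock##*/ssh-}
    name=${name%.socket}
    printf '== %s ==\n' "$name"
    # ssh-add -l exits 1 when the agent holds no keys, 2 when unreachable
    SSH_AUTH_SOCK=$sock ssh-add -l || printf '(no keys, or agent not reachable)\n'
  done
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  list_agent_keys "$@"
fi
```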

3rd EDIT, and I'm sorry about that, but I've been trying to get things working until now…:

I'm just not able to use sys-gpg.

See below:

    ┌─[user@coding] - [~/gpg-test] - [2023-03-16 04:47:28]
    └─[1] <> ls
    gpg-test.txt
    ┌─[user@coding] - [~/gpg-test] - [2023-03-16 04:47:51]
    └─[0] <> qubes-gpg-client --sign gpg-test.txt
    gpg: signing failed: Inappropriate ioctl for device
    [a few lines of binary output]
    gpg: signing failed: Inappropriate ioctl for device
    ┌─[user@coding] - [~/gpg-test] - [2023-03-16 04:48:13]
    └─[2] <>

Does anyone have an idea why I'm getting these errors?

These commands work in sys-gpg itself, so it must be a problem between sys-gpg and my AppVMs (I also tried different AppVMs).

I also tried the first Google solution, exporting GPG_TTY.

If you go to http://localhost you will see a handy link to the admin panel.

I don't understand what this means.

If you install the split-ssh package, a starter policy is created for you in /etc/qubes/policy.d/30-user.policy.

This will depend on whether you password-protect your ssh keys, and whether you want to set validity periods per key.

If you do want to start a service automatically and add a key, you can use systemd (it’s already been covered in this thread):

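    [Unit]
    Description=SSH agent

    [Service]
    # ssh-agent (without -D) binds the socket, then forks and the parent exits,
    # so with Type=forking ExecStartPost runs once the socket exists.
    Type=forking
    Environment=SSH_AUTH_SOCK=%t/ssh-agent.socket
    ExecStart=/usr/bin/ssh-agent -a $SSH_AUTH_SOCK
    ExecStartPost=/usr/bin/ssh-add [key]

    [Install]
    WantedBy=default.target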

The point of running multiple agents is that you can allocate keys as you wish:

- work: keyA, keyB, keyC
- projectA: keyA
- SG: keyB, keyC

There’s a simple utility to set up new agents: `/home/user/Configure-new-ssh-agent.sh`

You edit the 30-user policy so that the use of each agent is controlled, adapting this:

    qubes.SshAgent +AGENT @anyvm @anyvm ask default_target=sys-ssh-agent

where AGENT is the name of the ssh-agent you want to use (this is passed through as a parameter). This gives you fine-grained access control from qubes to agents, and therefore from qubes to ssh keys.
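The per-agent rules can be spelled out one line per qube. A bash sketch that prints such lines for pasting into the policy file in dom0. Assumptions: `make_agent_policy` is a hypothetical helper, and the rules use `@default` as the target column, which only matches a client that calls `qrexec-client-vm @default qubes.SshAgent+NAME`; a client naming another target would need that column adjusted.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print qrexec policy lines that give each qube one agent.
# Usage: make_agent_policy ask|allow TARGET QUBE:AGENT [QUBE:AGENT...]
make_agent_policy() {
  local action=$1 target=$2 option pair qube agent
  case $action in
    ask)   option=default_target ;;  # ask only pre-selects the target in the dialog
    allow) option=target ;;          # allow must name the target outright
    *)     echo "unsupported action: $action" >&2; return 1 ;;
  esac
  shift 2
  for pair in "$@"; do
    qube=${pair%%:*}
    agent=${pair#*:}
    printf 'qubes.SshAgent +%s %s @default %s %s=%s\n' \
      "$agent" "$qube" "$action" "$option" "$target"
  done
  # first match wins, so this only catches requests not listed above
  printf 'qubes.SshAgent * @anyvm @anyvm deny\n'
}

if [[ "${BASH_SOURCE[0]}" == "$0" && $# -ge 3 ]]; then
  make_agent_policy "$@"
fi
```

For example, `make_agent_policy allow sys-ssh-agent coding:coding work:work` lets the coding qube use only the coding agent and the work qube only the work agent. Since the first matching rule wins, the output would go above any broader `qubes.SshAgent` line.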

You can also clone sys-ssh-agent if you want, storing different keys on different backends rather than having them all on one qube.

Hey,

Thanks for the write-up; I got everything you wrote here working yesterday.

Here's my issue. I've set up my client like this:

    socat UNIX-LISTEN:$SSH_AUTH_SOCK,unlink-early,reuseaddr,fork EXEC:"qrexec-client-vm @dispvm qubes.SshAgent+coding" &

and my work AppVM like this:

    socat UNIX-LISTEN:$SSH_AUTH_SOCK,unlink-early,reuseaddr,fork EXEC:"qrexec-client-vm @dispvm qubes.SshAgent+work" &

Now the problem is: if sys-ssh-agent isn't running and I do something like `ssh conorz@some-ip -p 1234` in the coding AppVM, it doesn't get any keys.

I have to open a terminal and run the coding-agent script.

And if I run `ssh-add -L` in work, I see the keys for the coding AppVM.

Is there a way to completely separate the keys once a script has already run?

Like adding something such as `SSH_KEYS=""` to the coding-agent script.

But as I said, before I do that, the script has to run automatically for the correct agent.

And about the gpg problem, don't you have an idea? I just tried running `qubes-gpg-client --sign test.txt` again today and sadly still get the ioctl error.

But the weird part is that if I run `qubes-gpg-client -K`, I can see all the keys.

`gpg --sign test.txt` works in sys-gpg itself.

EDIT:

Found this:

The Split GPG client will fail to sign or encrypt if the private key in the GnuPG backend is protected by a passphrase. It will give an Inappropriate ioctl for device error.

Now I'm getting something like this:

    ┌─[user@coding] - [~/gpg-test] - [2023-03-16 12:55:26]
    └─[0] <> qubes-gpg-client --sign something.txt
    [binary signature data printed to the terminal; the file's text "hello world, i hope this is gonna working" is visible inside it]
    ┌─[user@coding] - [~/gpg-test] - [2023-03-16 12:55:37]
    └─[0] <> ls
    something.txt
    ┌─[user@coding] - [~/gpg-test] - [2023-03-16 12:55:39]
    └─[0] <>

The only thing, as you can see, is that signing doesn't create a something.txt.gpg.

Small update on this:

I was looking for gpg's --clearsign option; that was my “issue”.
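As the session above shows, qubes-gpg-client prints its result to standard output rather than creating a `.gpg` file, so the signature has to be redirected to keep it. A small bash sketch; `clearsign_to_file` is a hypothetical name, and it writes to a temporary file first so a failed signing does not leave a truncated `.asc` behind.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: clearsign FILE through split-gpg and save it as FILE.asc
# (or as the second argument). qubes-gpg-client prints the result to stdout.
clearsign_to_file() {
  local in=$1 out=${2:-$1.asc} tmp
  tmp=$(mktemp "$out.XXXXXX") || return 1
  if qubes-gpg-client --clearsign "$in" > "$tmp"; then
    mv -- "$tmp" "$out"
  else
    rm -f -- "$tmp"
    return 1
  fi
}

if [[ "${BASH_SOURCE[0]}" == "$0" && $# -ge 1 ]]; then
  clearsign_to_file "$@"
fi
```

Checking the result in the client qube with `gpg --verify something.txt.asc` works once the signer's public key has been imported there.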

But I still haven't found a solution for my ssh problem. My plan is that when I ssh to my server, sys-ssh-agent runs the matching NAME-agent.sh script, so that I don't need to run it manually.

Is this possible?

Or do I have to create a service that runs the correct script, and create the correct ssh-agent for every qube?

Many users protect their ssh keys with passwords, and ssh-agents therefore require manual intervention.

I suppose you could modify the SshAgent service to parse the input, check whether the relevant agent is running, and start it if it is not, but that is different from the current use case.
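A rough bash sketch of the "check if the relevant agent is running and start it" step, assuming the agent's socket path is already known (for example ssh-coding.socket). It relies on `ssh-add -l` exiting with status 2 when no agent answers on the socket; `ensure_agent` is a hypothetical name, and hooking it into the SshAgent service itself is not shown.

```shell
#!/usr/bin/env bash
# Hypothetical helper: start an ssh-agent on SOCKET unless one already answers there.
ensure_agent() {
  local sock=$1 rc=0
  # ssh-add -l: 0 = keys listed, 1 = agent has no keys, 2 = cannot contact agent
  SSH_AUTH_SOCK=$sock ssh-add -l >/dev/null 2>&1 || rc=$?
  if [ "$rc" -eq 2 ]; then
    rm -f -- "$sock"                  # clear a stale socket left by a dead agent
    ssh-agent -a "$sock" >/dev/null   # binds the socket, then forks into the background
  fi
}

if [[ "${BASH_SOURCE[0]}" == "$0" && $# -ge 1 ]]; then
  ensure_agent "$1"
fi
```

A freshly started agent holds no keys, so loading them (and any passphrase prompts) would still be a separate step.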

Thanks for your reply.

The agent is running automatically anyway; I just still don't have a plan for how to run the correct agent script and delete the keys (added with ssh-add) after I've used them.

Maybe I'll solve that by just cloning the VM.

So I'll add the scripts to my service, so they run when the VM starts.

But that's not my first priority at the moment.

I don't understand you.

Sorry, I've been on night shifts for 2 weeks now and I feel burned out or something similar; I'm currently spending over an hour on the easiest issues.

My native language is in about the same shape as my English at the moment.

Anyhow, I meant that I have to figure out how to trigger coding-agent.sh when my coding VM requests the ssh keys.

How they get “deleted” I already found out; I meant this command: `ssh-add -t <secs> ...`

I also messed up my qubes policy now: I wanted to change ask to allow, because I have to rebase a git repo and don't want to press OK 300 times.

If I change it to allow, the VM can't get the private key, and it seems like qrexec stops working, because I also can't copy and paste from or to the global clipboard anymore.

If I change everything back to ask, everything works again.

There is no facility to automatically add keys on demand.

You can simply add keys at startup of the service by editing the relevant NAME-agent.sh script to include the keys you want.

If you want this, you can't use keys with passwords or time-limited keys, both of which are good practice but obviously won't work with automation.

This shows you have introduced a syntax error in the file.
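One guess at the syntax error, since the edited line isn't shown: as far as I know, in the Qubes 4.1 policy format `default_target=` is only accepted by `ask`, while `allow` takes `target=`. So turning `ask default_target=sys-ssh-agent` into `allow default_target=sys-ssh-agent` would make the file invalid, and a policy that fails to load would plausibly also explain the clipboard breaking. Keeping the rest of the line and writing `allow target=sys-ssh-agent` is the form to try. A rough bash/awk check for just that one mistake; `find_bad_default_target` is a hypothetical helper, not a real policy parser.

```shell
#!/usr/bin/env bash
# Hypothetical quick check (not a real policy parser): report lines in qrexec
# policy files that use default_target= with an action other than ask.
# Assumes the 4.1 format: service argument source target action [options...],
# so the action is field 5. Exits 1 if any such line is found.
find_bad_default_target() {
  awk '
    /^[ \t]*(#|$)/ { next }        # skip comments and blank lines
    $5 != "ask" && /default_target=/ {
      printf "%s:%d: %s\n", FILENAME, FNR, $0
      bad = 1
    }
    END { exit bad }
  ' "$@"
}

if [[ "${BASH_SOURCE[0]}" == "$0" && $# -ge 1 ]]; then
  find_bad_default_target "$@"
fi
```

In dom0 it could be run as `find_bad_default_target /etc/qubes/policy.d/*.policy`.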

I never presume to speak for the Qubes team. When I comment in the Forum or in the mailing lists I speak for myself.

2 posts were split to a new topic: Simple tool set-up - monero wallet issues

Did the sys-vpn issue get moved to a different thread? I was following that conversation while troubleshooting my issue, which is exactly what others were describing. I came to the conclusion that it’s something to do with my cacher, but I didn’t see a clear resolution. I’m doing a full set of updates by unchecking the box and telling it to look for updates, because I think unman mentioned that at one point. If that doesn’t work, I’m guessing I need to find out what failed in the install? The VM is kind of difficult to work on since it’s password-protected now, and I can’t even get the gpk-update trick I found in a different thread to work.

I was looking through the sys-vpn page on GitHub and am guessing that going through each of those steps individually may work too? I’m still learning the whole salt concept, but I have worked with various scripting languages and can follow along enough not to be totally confused, though I definitely don’t have anywhere near the understanding many in this thread have.

I’d prefer to keep this thread for discussion of the tool, and to separate out support issues.

Can you move this to a new thread in User Support with some details of your problem? I understand you have a cacher, but you don’t say what your problem is.