This “guide” aims to explore and give a practical example of leveraging SaltStack to achieve the same goal as [NVIDIA GPU passthrough into Linux HVMs for CUDA applications](https://forum.qubes-os.org/t/nvidia-gpu-passthrough-into-linux-hvms-for-cuda-applications/9515/1). Salt is a management engine that simplifies configuration, and QubesOS has its own flavour of it. Want to see some?

This guide assumes that you’re done fiddling with your IOMMU groups and have modified grub parameters to allow passthrough.

If you haven’t set up a salt environment yet, complete step 1.1 as described in this guide to get ready.

The basics

Before we even start doing anything, let’s discuss the basics. You probably already know that salt configurations are stored in /srv/user_salt/. Here’s how it may look:

    .
    ├── nvidia-driver
    │   ├── f41-disable-nouveau.sls
    │   ├── f41.sls
    │   └── default.jinja
    ├── test.sls
    └── top.sls

Let’s start with the obvious. top.sls is a top file. It describes the highstate, which is really just a combination of conventional salt formulas. A standalone piece of salt configuration can be referred to as a formula, although I’ve seen this term used for different things. test.sls is a state file. It contains configuration written in YAML. nvidia-driver is also a state, although it is a directory. This is an alternative way to store a state when you want to have multiple state files (or not only state files). When a state directory is referenced, salt evaluates the init.sls state file inside it. Other state files may or may not be included from init.sls or from each other.

Since in this case different formulas are used depending on the distribution, it doesn’t make much sense to have an init.sls. In this configuration, you can’t just call for nvidia-driver; you must specify the distribution too: nvidia-driver.f41.

YAML configuration consists of states. In this context, a state refers to a module: a piece of code that usually does one fairly specific thing. In a configuration, states behave like commands, or like functions and methods in a programming language. Salt formulas differ from conventional programming languages in their order of execution, though. Unless you explicitly define the order using arguments like require, require_in, and order, you should not expect states to execute in any particular sequence.
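To make the effect of require concrete, here is a toy model in Python (the language Salt itself is written in). It only models require edges between the Fedora state IDs used later in this guide (nvidia-driver--assert-install is the ID the X config step refers to). Salt’s real scheduler handles more requisite types and has its own default ordering, so treat this as an illustration, not as how Salt works internally.

```python
from graphlib import TopologicalSorter

# Toy model: each state ID maps to the set of state IDs it requires.
# Only "require" is modelled; Salt also has require_in, watch, onchanges, order...
REQUIRES = {
    "nvidia-driver--enable-repo": set(),
    "nvidia-driver--extend-tmp": set(),
    "nvidia-driver--install": {"nvidia-driver--enable-repo", "nvidia-driver--extend-tmp"},
    "nvidia-driver--assert-install": {"nvidia-driver--install"},
    "nvidia-driver--remove-conf": {"nvidia-driver--assert-install"},
}


def execution_order(requires):
    """Return one order in which every state runs after everything it requires."""
    return list(TopologicalSorter(requires).static_order())


if __name__ == "__main__":
    for state_id in execution_order(REQUIRES):
        print(state_id)
```

Note that enable-repo and extend-tmp have no edge between them, so either may come first. That is exactly the “don’t expect a particular sequence” situation.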

One thing to note here is that not all modules are state modules. There are a lot of them, and they can do various things, but here we only need the state kind.

In addition to state files, you may notice default.jinja. Jinja is a templating engine, which means it helps you generalize your state files by adding variables, conditions and other cool features. You can easily recognize Jinja by its fancy brackets: {{ }}, {% %}, {# #}. This particular file stores variable definitions and is used to configure the whole state tree (the nvidia-driver directory).

Writing salt configuration

1. Create a standalone

First, let’s write a state that describes how the VM should be created:

    nvidia-driver--create-qube:
      qvm.vm:
        - name: {{ nvd_f41['standalone']['name'] }}
        - present:
          - template: {{ nvd_f41['template']['name'] }}
          - label: {{ nvd_f41['standalone']['label'] }}
          - flags:
            - standalone
        - prefs:
          - vcpus: {{ nvd_f41['standalone']['vcpus'] }}
          - memory: {{ nvd_f41['standalone']['memory'] }}
          - maxmem: 0
          - pcidevs: {{ nvd_f41['devices'] }}
          - virt_mode: hvm
          - kernel:
        - features:
          - set:
            - menu-items: qubes-run-terminal.desktop

Here, I use the Qubes-specific qvm.vm state module (a wrapper around other modules, like prefs, features, etc.). Almost all keys and values here are the same ones you can set and get using qvm-prefs and qvm-features. For nvidia drivers to work, the kernel must be provided by the qube itself, which is why the kernel field is empty. Similarly, to pass the GPU through we need to set the virtualization mode to hvm and maxmem to 0 (which disables memory balancing).
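To see how these prefs map onto the command-line tools, this Python sketch only prints (never runs) roughly equivalent dom0 commands for the prefs and features above, using the default values from default.jinja. It leaves out pcidevs and the standalone flag: as far as I know, PCI assignment and qube creation go through other tools, not qvm-prefs, so check the Qubes documentation for your release. QUBE, PREFS, FEATURES and equivalent_commands are illustrative names.

```python
import shlex

# Defaults copied from default.jinja in this guide
QUBE = "fedora-41-nvidia-cuda"
PREFS = {
    "vcpus": "4",
    "memory": "4000",
    "maxmem": "0",       # 0 disables memory balancing
    "virt_mode": "hvm",
    "kernel": "",        # empty: the qube boots its own kernel
}
FEATURES = {"menu-items": "qubes-run-terminal.desktop"}


def equivalent_commands(qube, prefs, features):
    """Build (but never run) dom0 commands roughly equivalent to the prefs/features."""
    cmds = [["qvm-prefs", qube, key, value] for key, value in prefs.items()]
    cmds += [["qvm-features", qube, key, value] for key, value in features.items()]
    # shlex.join quotes the empty kernel value as ''
    return [shlex.join(cmd) for cmd in cmds]


if __name__ == "__main__":
    print("\n".join(equivalent_commands(QUBE, PREFS, FEATURES)))
```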

nvidia-driver--create-qube is just a label (the state ID). As long as it doesn’t break the YAML syntax, it should be fine. Aside from being used in references, plenty of modules use it to simplify the syntax, and some need it to decide what to do, but you can look that up later if you want.

Now, to the Jinja statements. Here, they provide values for keys like label, template, name, etc. Some are written this way (instead of a hand-written value) because the value is repeated in the state file multiple times; others simply make configuration easier. In this state file, the Jinja variable is imported using the following snippet:

    {% if nvd_f41 is not defined %}
    {% from 'nvidia-driver/default.jinja' import nvd_f41 %}
    {% endif %}

Jinja’s syntax is very similar to Python’s. In this case, the variable from default.jinja is imported only if it is not already defined in the current context. This lets us both call this formula as is (without any Jinja context) and include it in other formulas (potentially with a custom definition of nvd_f41). Note that you need to specify the state directory when importing, and use the actual path instead of dot notation.

Upon inspection of default.jinja, we see:

    {# Defaults; define nvd_f41 yourself before including the formula to override them #}
    {% set nvd_f41 = {
      'template': {'name': 'fedora-41-xfce'},
      'standalone': {
        'name': 'fedora-41-nvidia-cuda',
        'label': 'yellow',
        'memory': '4000',
        'vcpus': '4',
      },
      'devices': ['01:00.0', '01:00.1'],
      'paths': {
        'nvidia_conf': '/usr/share/X11/xorg.conf.d/nvidia.conf',
        'grub_conf': '/etc/default/grub',
        'grub_out': '/boot/grub2/grub.cfg',
      },
    } %}

Here, I declare the dictionary nvd_f41. It contains sub-dictionaries for template parameters and standalone qube parameters, a list of PCI devices to pass through, and another dictionary for paths. Since all devices in the list need to be passed to the new qube, the state file references the whole list.
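Because the paths dictionary feeds Salt’s file states, and those states refuse relative paths (as far as I can tell), a quick sanity check can catch a missing leading slash before anything runs. This Python sketch uses a mirror of the paths sub-dictionary with every entry written as an absolute path; PATHS and non_absolute are hypothetical names.

```python
import os.path

# Mirror of the 'paths' sub-dictionary in default.jinja (absolute paths)
PATHS = {
    "nvidia_conf": "/usr/share/X11/xorg.conf.d/nvidia.conf",
    "grub_conf": "/etc/default/grub",
    "grub_out": "/boot/grub2/grub.cfg",
}


def non_absolute(paths):
    """Return, sorted, the keys whose values are not absolute paths."""
    return sorted(key for key, value in paths.items() if not os.path.isabs(value))


if __name__ == "__main__":
    bad = non_absolute(PATHS)
    print("all paths absolute" if not bad else f"relative paths: {bad}")
```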

Jinja behaves differently depending on the delimiter. Code in double curly braces (called an expression) tells the parser to “print” the resulting value into the state file before the show starts. Statements ({% %}) do logic. {# #} is a comment.
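Here is a toy Python imitation of what an expression does. It is limited to nvd_f41[...] lookups and is not Jinja, just a regex. It shows why referencing the whole devices list works: the value is converted to a string, and in Python 3 a list prints as ['01:00.0', '01:00.1'], which YAML happens to read as a flow sequence. render and NVD_F41 are illustrative names.

```python
import re

# A trimmed copy of nvd_f41 from default.jinja
NVD_F41 = {
    "standalone": {"name": "fedora-41-nvidia-cuda", "vcpus": "4"},
    "devices": ["01:00.0", "01:00.1"],
}

# Matches {{ nvd_f41['a'] }}, {{ nvd_f41['a']['b'] }}, ...
EXPR = re.compile(r"\{\{\s*nvd_f41((?:\['[^']+'\])+)\s*\}\}")
KEY = re.compile(r"\['([^']+)'\]")


def render(line, context):
    """Replace each {{ nvd_f41[...] }} expression with str() of the looked-up value."""
    def substitute(match):
        value = context
        for key in KEY.findall(match.group(1)):
            value = value[key]
        return str(value)
    return EXPR.sub(substitute, line)


if __name__ == "__main__":
    print(render("- pcidevs: {{ nvd_f41['devices'] }}", NVD_F41))
```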

1.5 Interlude: what’s next?

Now that we have a qube ready (you can check it by applying the formula), how do we install drivers? I want to discuss what comes next, because at the time of writing (March 2025) the driver installation processes for Fedora 41 and Debian 12 are different.

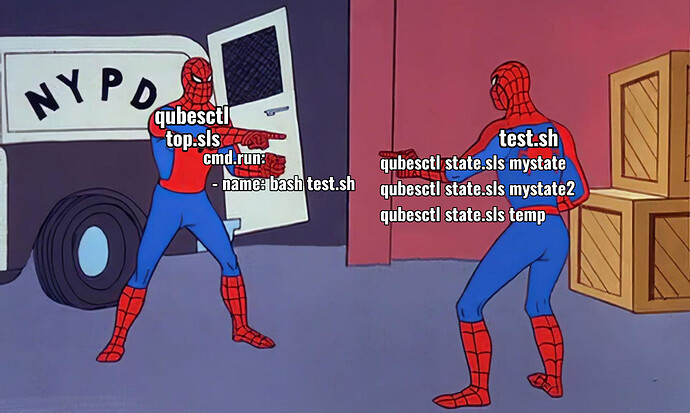

How do I apply a formula?

To apply a formula, put your state into your salt environment folder together with the Jinja file and run

sudo qubesctl --show-output state.sls <name_of_your_state> saltenv=user

(substitute <name_of_your_state>)

Salt applies the state to all targets. When none are specified, dom0 is the only target. This is what we want here, because dom0 handles the creation of qubes. Add --skip-dom0 to skip dom0, and --targets=<targets> to add other qubes.
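If you end up applying formulas repeatedly, it can help to see how the qubesctl options combine. The Python helper below is hypothetical (qubesctl_cmd is my own name, not part of Qubes). It only builds the command line from the options described above and does not run anything.

```python
import shlex


def qubesctl_cmd(state, targets=(), skip_dom0=False, saltenv="user"):
    """Build (not run) a qubesctl command that applies one state."""
    cmd = ["sudo", "qubesctl", "--show-output"]
    if skip_dom0:
        cmd.append("--skip-dom0")
    if targets:
        # qubesctl takes a comma-separated list of qubes
        cmd.append("--targets=" + ",".join(targets))
    cmd += ["state.sls", state, "saltenv=" + saltenv]
    return shlex.join(cmd)


if __name__ == "__main__":
    print(qubesctl_cmd("nvidia-driver.f41", targets=["fedora-41-nvidia-cuda"]))
```

Without --skip-dom0, the second form applies the state to dom0 first and then to the listed qube.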

The plan:

| # | Fedora 41 | Debian 12 |
|---|---|---|
| 1 | Prepare the qube | Prepare the qube |
| 2 | Enable rpmfusion repositories | Add repository components |
| 3 | Grow /tmp/, because the default 1G was too small to fit everything the driver building process spews out. By my measurements, the driver no longer needs more than 1G to build; I left this step in just in case the problem still occurs with different hardware. | - |
| 4 | Install drivers & wait for the build | Update, upgrade, install drivers |
| 5 | Delete the X config, because we don’t need it where we’re going | - |
| 6 | Optional: disable nouveau, because the nvidia install script may fail to convince the system that it should use the nvidia driver. | - |

Please be aware that both Debian and rpmfusion driver package names may differ depending on your graphics card. This guide uses the most common modern package names, but you should check for yourself. This guide also assumes that you start from the default Qubes templates: the Debian installation process changes if dracut or SecureBoot are used by the system.

Debian has the nvidia-detect program to tell you which drivers you need. I should be able to parse its output in a state to create a truly hardware-agnostic formula.

2-0.5. How to choose target inside the state file

Unless you are willing to write (and call) multiple states to perform one operation, you might be wondering how to make salt apply only the first state (qube creation) to dom0, and all the others to the nvidia qube. The answer is to use Jinja:

    {% if grains['id'] == 'dom0' %}
    {# dom0 stuff goes here #}
    {% elif grains['id'] == nvd_f41['standalone']['name'] %}
    {# nvd_f41['standalone']['name'] stuff goes here #}
    {% endif %}

That way, the state is applied to all targets (dom0 and the qube named by nvd_f41['standalone']['name'], which you add with --targets), but Jinja renders the state file appropriately for each of them.
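The per-target rendering can be modelled as a plain function of the minion ID. This is only a Python analogy of the if/elif above (states_for and the state lists are illustrative). What matters is that any target other than the two named ones renders an empty state file and therefore does nothing.

```python
# Illustrative stand-ins for what each branch of the if/elif contains
DOM0_STATES = ["nvidia-driver--create-qube"]
QUBE_STATES = ["nvidia-driver--enable-repo", "nvidia-driver--extend-tmp", "nvidia-driver--install"]
QUBE_NAME = "fedora-41-nvidia-cuda"  # nvd_f41['standalone']['name']


def states_for(minion_id):
    """Mimic the {% if grains['id'] == ... %} branches for one target."""
    if minion_id == "dom0":
        return list(DOM0_STATES)
    elif minion_id == QUBE_NAME:
        return list(QUBE_STATES)
    return []  # no branch matched: the rendered state file is empty


if __name__ == "__main__":
    for target in ("dom0", QUBE_NAME, "personal"):
        print(target, states_for(target))
```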

2. Configure repositories

fedora 41

Pretty self-explanatory. {free,nonfree} is used to enable multiple repositories at once. This is brace expansion, a feature of the shell, not of salt or Jinja.

    nvidia-driver--enable-repo:
      cmd.run:
        - name: dnf config-manager setopt rpmfusion-{free,nonfree}{,-updates}.enabled=1
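If you want to double-check what the shell turns that pattern into, the product of the two brace groups gives the four repository options. The Python below reproduces that expansion (expand_repo_options is an illustrative name). Note that brace expansion is a bash feature, so if the command ever runs under a plain POSIX sh, spelling the four names out is the safer option.

```python
from itertools import product


def expand_repo_options(families=("free", "nonfree"), suffixes=("", "-updates")):
    """Reproduce bash's expansion of rpmfusion-{free,nonfree}{,-updates}.enabled=1."""
    # bash expands the leftmost brace group outermost, like product() does
    return [f"rpmfusion-{family}{suffix}.enabled=1" for family, suffix in product(families, suffixes)]


if __name__ == "__main__":
    print(" ".join(expand_repo_options()))
```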

debian 12

In order to configure Debian repository components, I use the pkgrepo state.

    nvidia-driver--enable-repo:
      pkgrepo.managed:
        - name: deb https://deb.debian.org/debian bookworm main contrib non-free non-free-firmware
        - file: /etc/apt/sources.list
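pkgrepo.managed takes a one-line deb entry: the type, the URI, the suite, then the components. non-free-firmware is the component Debian 12 moved firmware packages such as firmware-misc-nonfree into, which is why it is listed. Here is a small Python sketch (missing_components is a hypothetical helper) that parses such a line, skipping an optional [options] block, and reports which of the four components are missing:

```python
REQUIRED = {"main", "contrib", "non-free", "non-free-firmware"}


def missing_components(deb_line, required=REQUIRED):
    """Return, sorted, the required components absent from a one-line deb entry."""
    fields = deb_line.split()
    if not fields or fields[0] != "deb":
        raise ValueError(f"not a one-line deb entry: {deb_line!r}")
    rest = fields[1:]
    # Skip an optional "[key=value ...]" block, which may span several fields
    if rest and rest[0].startswith("["):
        while rest and not rest[0].endswith("]"):
            rest = rest[1:]
        rest = rest[1:]
    if len(rest) < 3:
        raise ValueError(f"expected URI, suite and components in: {deb_line!r}")
    _uri, _suite, *components = rest
    return sorted(set(required) - set(components))


if __name__ == "__main__":
    print(missing_components("deb https://deb.debian.org/debian bookworm main"))
```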

3. Extend /tmp/

This lasts until reboot. As I already mentioned, you might not need this. On the other hand, it is non-persistent and generally harmless, so why not?

    nvidia-driver--extend-tmp:
      cmd.run:
        - name: mount -o remount,size=2G /tmp/
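To confirm from inside the qube that the remount took effect (df -h /tmp shows the same thing), a short Python check is enough; tmp_size_gib is an illustrative name. Since the remount doesn’t persist, expect the old size again after a reboot.

```python
import shutil


def tmp_size_gib(path="/tmp"):
    """Total size, in GiB, of the filesystem that holds `path`."""
    return shutil.disk_usage(path).total / 2**30


if __name__ == "__main__":
    print(f"/tmp is {tmp_size_gib():.1f} GiB")
```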

4. Install drivers

Here, I use the - require: requisite to wait for other states to apply before installing the drivers. Note that each entry needs both the state module (e.g. cmd) and the state ID (label) to work.
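Because a requisite is matched on both the state module and the ID, pointing it at the wrong module (say, pkgrepo: nvidia-driver--enable-repo when the Fedora repo state is a cmd.run) makes Salt report the requisite as not found. This toy Python checker, with made-up names, flags such mismatches in a hand-written model of the states. Salt also accepts the name argument in place of the ID, which the toy ignores.

```python
# Toy model: state ID -> {state module: [(module, ID) requisites]}
FEDORA_STATES = {
    "nvidia-driver--enable-repo": {"cmd": []},
    "nvidia-driver--extend-tmp": {"cmd": []},
    "nvidia-driver--install": {
        "pkg": [
            ("pkgrepo", "nvidia-driver--enable-repo"),  # wrong module on purpose
            ("cmd", "nvidia-driver--extend-tmp"),
        ],
    },
}


def broken_requisites(states):
    """List requisites whose (module, ID) pair matches no declared state."""
    declared = {(module, state_id) for state_id, modules in states.items() for module in modules}
    return [
        (state_id, module, requisite)
        for state_id, modules in states.items()
        for module, requisites in modules.items()
        for requisite in requisites
        if requisite not in declared
    ]


if __name__ == "__main__":
    for problem in broken_requisites(FEDORA_STATES):
        print("unresolved requisite:", problem)
```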

fedora 41

    nvidia-driver--install:
      pkg.installed:
        - pkgs:
          - akmod-nvidia
          - xorg-x11-drv-nvidia-cuda
          {# - vulkan #}
        - require:
          - cmd: nvidia-driver--enable-repo    # on Fedora the repo state is a cmd.run
          - cmd: nvidia-driver--extend-tmp

    nvidia-driver--assert-install:
      loop.until_no_eval:
        - name: cmd.run
        - expected: 'nvidia'
        - period: 20
        - timeout: 600
        - args:
          - modinfo -F name nvidia
        - require:
          - pkg: nvidia-driver--install        # the install state is a pkg.installed

In the case of Fedora, I also use loop.until_no_eval to wait until the driver is done building. It runs the function given in - name: until its return value matches - expected:. Here it is set to try every 20 seconds, for up to 600 seconds. - args: lists what to pass to the function in - name:.

Essentially, it runs modinfo -F name nvidia, which translates into “What is the name of the module named ‘nvidia’?”. It returns an error until the module is present (i.e. done building), and then returns ‘nvidia’.
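Here is the same polling idea in plain Python, as a hedged sketch. until_no_eval here is my own function that imitates the idea of Salt’s loop.until_no_eval, not its actual code (the real state has more options). Errors from modinfo count as “not built yet”, and the sleep and clock parameters exist only so the loop can be tested without waiting.

```python
import subprocess
import time


def until_no_eval(func, expected, period=20, timeout=600, sleep=time.sleep, clock=time.monotonic):
    """Call func() every `period` seconds until it returns `expected` or `timeout` runs out.

    Returns True on success and False on timeout. Any exception raised by func()
    counts as "not yet", the same way a failing modinfo just means the module
    hasn't been built.
    """
    deadline = clock() + timeout
    while True:
        try:
            if func() == expected:
                return True
        except Exception:
            pass  # treat errors as "not ready yet" and keep polling
        if clock() + period > deadline:
            return False  # the next attempt would land past the timeout
        sleep(period)


def nvidia_module_name():
    """Run `modinfo -F name nvidia`; raises until the nvidia module exists."""
    result = subprocess.run(
        ["modinfo", "-F", "name", "nvidia"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    built = until_no_eval(nvidia_module_name, "nvidia", period=20, timeout=600)
    print("nvidia module built" if built else "timed out waiting for the nvidia module")
```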

debian 12

    nvidia-driver--install:
      cmd.run:
        - name: apt update -y && apt upgrade -y
        - require:
          - pkgrepo: nvidia-driver--enable-repo
      pkg.installed:
        - names:
          - linux-headers-amd64
          - nvidia-driver
          - firmware-misc-nonfree
          - nvidia-open-kernel-dkms
          - nvidia-cuda-dev
          - nvidia-cuda-toolkit
          {# comment `nvidia-open-kernel-dkms` out to go full proprietary #}
        - require:
          - cmd: nvidia-driver--install

Technically, apt only needs to update metadata before installing, but I also run upgrade because the default debian template is pretty far behind.

5. Delete X config

    nvidia-driver--remove-conf:
      file.absent:
        - name: {{ nvd_f41['paths']['nvidia_conf'] }}
        - require:
          - loop: nvidia-driver--assert-install

6. Disable nouveau

If you download the state files, you will find this step in a separate file. This is done for two reasons:

- It may not be required.
- I think the VM must be restarted before this change is applied, so run the main state first and apply this one after restarting the qube.
  - Why? No idea.
  - Why not just add a reboot state before this one? Because only dom0 can reboot qubes, dom0 states are always applied first, and there is no way I know of to make dom0 run part of its state, wait until a condition is met, and continue without multiple calls to qubesctl, unless…

    {% if nvd_f41 is not defined %}
    {% from 'nvidia-driver/default.jinja' import nvd_f41 %}
    {% endif %}

    {% if grains['id'] == nvd_f41['standalone']['name'] %}
    nvidia-driver.disable-nouveau--blacklist-nouveau:
      file.append:
        - name: {{ nvd_f41['paths']['grub_conf'] }}
        - text: 'GRUB_CMDLINE_LINUX="$GRUB_CMDLINE_LINUX rd.driver.blacklist=nouveau"'

    nvidia-driver.disable-nouveau--grub-mkconfig:
      cmd.run:
        - name: grub2-mkconfig -o {{ nvd_f41['paths']['grub_out'] }}
        - require:
          - file: nvidia-driver.disable-nouveau--blacklist-nouveau
    {% endif %}

Make sure to change the paths if you’re not running fedora 41.
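Salt’s file.append does not add text that is already in the file, so re-applying this state won’t stack duplicate lines in /etc/default/grub. And because grub2-mkconfig sources that file as shell, $GRUB_CMDLINE_LINUX expands to the earlier value, so the appended line extends the kernel command line instead of replacing it. Here is a Python sketch of the append-if-missing part (append_if_missing is hypothetical and compares whole lines, which is simpler than Salt’s own check):

```python
from pathlib import Path


def append_if_missing(path, line):
    """Append `line` to the file unless an identical line is already there.

    Returns True if the line was appended, False if it was already present.
    """
    p = Path(path)
    text = p.read_text() if p.exists() else ""
    if line in text.splitlines():
        return False
    if text and not text.endswith("\n"):
        text += "\n"  # don't glue the new line onto an unterminated last line
    p.write_text(text + line + "\n")
    return True
```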

Not the ways I know of:

- Advanced: check for conditions in grains and pillars, see this topic.
- Why? Also, I don’t think it can work.

Downloads

Current version

- github repo
- Direct download:
  - d12.yaml (1.5 KB)
  - default.yaml (650 Bytes)
  - f41.yaml (1.6 KB)
  - f41-disable-nouveau.yaml (567 Bytes)

Old version (fedora 40)

This formula differs quite a lot from the new one, due to the changes in dnf, the resolution of the grubby-dummy dependency conflict, and updates to the way I use Jinja. Here is the old procedure:

- Prepare the qube
- Enable the rpmfusion repository
- Grow /tmp/, because the default 1G is too small to fit everything the driver building process spews out. It will fail otherwise.
- Delete grubby-dummy, because it conflicts with sdubby. Nvidia drivers depend on it. See this issue.
- Install akmod-nvidia and xorg-x11-drv-nvidia-cuda
- Wait for the driver to build
- Delete the X config, because we don’t need it where we’re going
- Optional: disable nouveau, because the nvidia install script may fail to convince the system that it should use the nvidia driver.

Old state files. They’re kind of uggo, but I like them anyway:

- disable-nouveau.yaml (486 Bytes)
- init.yaml (2.2 KB)
- map.yaml (468 Bytes)

Uploading salt files is forbidden, so the files are renamed to .yaml. For now, I only have a state for Fedora 40, but modifying it for Debian, or for Fedora without conflicting dependencies, is trivial.

Contributions, improvements and fixes are welcome! I call it GPL-3 if you need that for some reason.