One of the reasons I am trying to make Proxmox work on QubesOS is so that I can create a Proxmox cluster and use the features a cluster provides, such as HA, migration, Ceph, replication …

So, more good news:

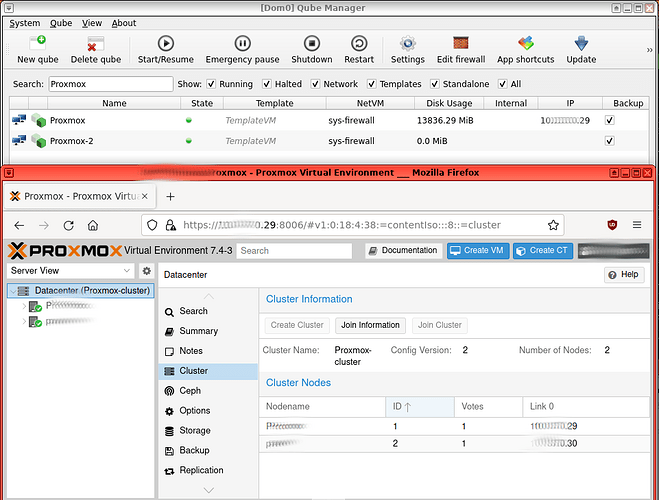

I created a second Proxmox qube called ProxmoxOnQubesOS-2.

I can run ProxmoxOnQubesOS and ProxmoxOnQubesOS-2 simultaneously on QubesOS.

I was also able to make the two of them communicate with each other, so that I could create a cluster in Proxmox.

On sys-firewall, I allowed forwarding between the two qubes in both directions:

`sudo iptables -I FORWARD 4 -s 10.137.0.30 -d 10.137.0.29 -j ACCEPT`

`sudo iptables -I FORWARD 5 -s 10.137.0.29 -d 10.137.0.30 -j ACCEPT`
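If more Proxmox qubes are added to the cluster later, every pair of them needs one rule per direction. The helper below is a hypothetical sketch (not part of the setup described here): `gen_rules` only prints the `iptables` commands for a list of qube IPs so they can be reviewed before being run in sys-firewall. The starting position 4 is copied from the rules above and may differ on another sys-firewall (`sudo iptables -L FORWARD -n --line-numbers` shows the current chain). Rules added by hand this way are lost when sys-firewall restarts; making them persistent (for example via `/rw/config/qubes-firewall-user-script`) depends on the Qubes version and is not covered here.

```shell
#!/bin/sh
# Hypothetical helper: print the sys-firewall FORWARD rules that let every
# qube in the list reach every other qube. It only prints the commands;
# nothing is applied.
# Usage: gen_rules START_POS IP1 IP2 [IP3 ...]
gen_rules() {
  pos=$1          # assumption: first free position, as in the rules above
  shift
  for src in "$@"; do
    for dst in "$@"; do
      # No rule is needed from a qube to itself.
      [ "$src" = "$dst" ] && continue
      echo "sudo iptables -I FORWARD $pos -s $src -d $dst -j ACCEPT"
      # Insert the next rule right after this one.
      pos=$((pos + 1))
    done
  done
}

# Reproduces the two rules used for ProxmoxOnQubesOS and ProxmoxOnQubesOS-2.
gen_rules 4 10.137.0.30 10.137.0.29
```

With two IPs it prints exactly the two commands above; with three IPs it prints six rules (positions 4 to 9), one per direction for each pair.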

In the screenshot:

- Proxmox = ProxmoxOnQubesOS
- Proxmox-2 = ProxmoxOnQubesOS-2

So far, only Alpine works for me as a guest in Proxmox, and running Alpine in both ProxmoxOnQubesOS and ProxmoxOnQubesOS-2 does work!

- Both Proxmox qubes, with virtualization enabled, can run simultaneously on QubesOS
- Both Proxmox qubes can run a VM (Alpine) simultaneously on QubesOS
- Both Proxmox qubes can communicate with each other
- Both Proxmox qubes are in the same cluster

Next things to try:

- Ceph
- Migration
- Replication
- HA