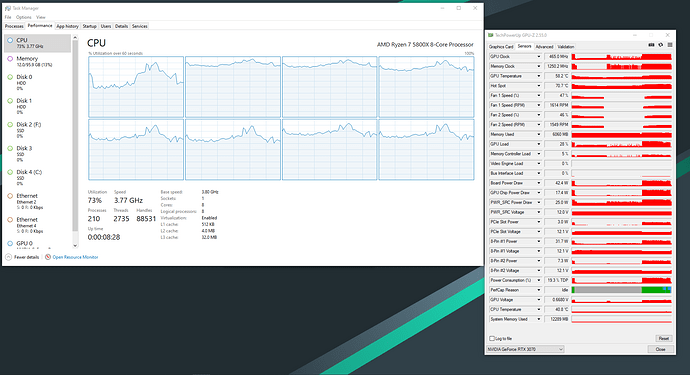

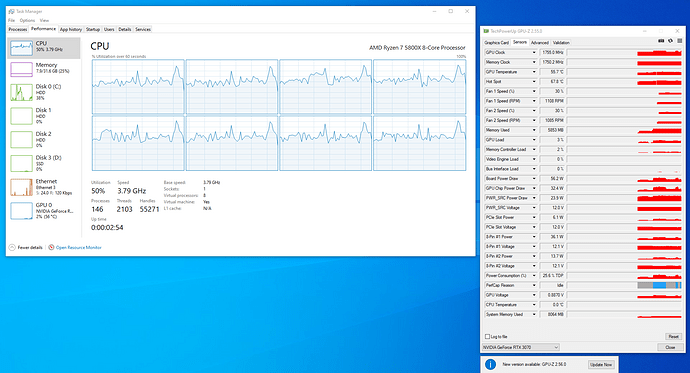

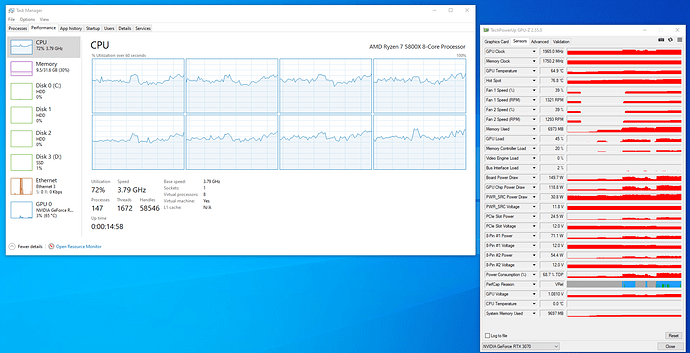

Just got a MASSIVE boost in my Windows HVM after messing with xen.xml. I'm now getting native frame rates in Cyberpunk, Starfield, and Hogwarts Legacy and seeing much higher GPU usage. Here is my current XML:

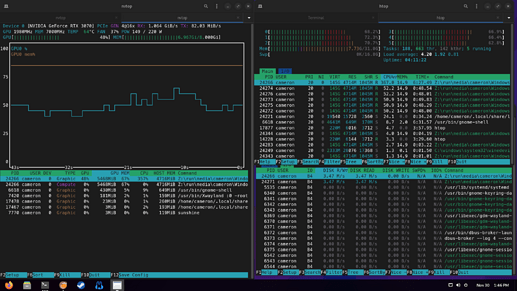

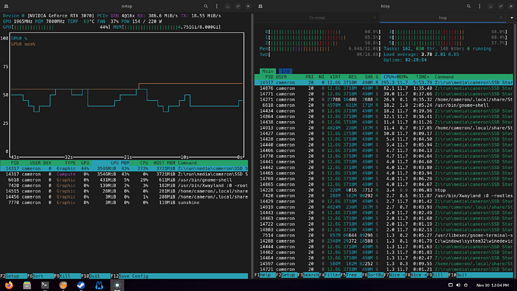

I'm going to do a little more testing to figure out exactly which option gave me the boost and whether it also works under the Linux guests. Honestly, I was just shotgunning other people's VFIO options and I think I got lucky. It's odd to me that it performs well with `<feature policy='disable' name='hypervisor'/>`; I had thought setting that flag would kill performance.

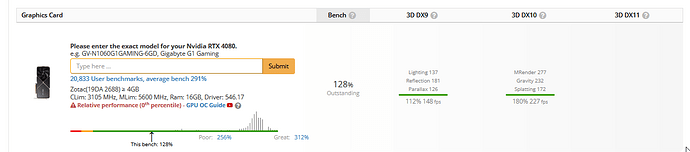

Slight exaggeration: it's not quite bare metal, but I'm getting 70 FPS in the benchmark vs 80 FPS on bare metal, compared to 35-40 FPS before.

I think I've got the settings somewhat nailed down for WINDOWS ONLY; hopefully it works on yours if you still have Qubes. The following needs to be added to `/usr/share/qubes/templates/libvirt/xen.xml` (or wherever you manage your XMLs; `virsh edit` did not work for me):

```xml
{% if vm.virt_mode != 'pv' %}
    <cpu mode='host-passthrough'>
        <!-- Add this block -->
        {% if vm.features.get('gaming_feature_policies', '0') == '1' -%}
        <feature policy="require" name="invtsc"/>
        <feature policy="disable" name="hypervisor"/>
        {% endif -%}
        <!-- the rest of the existing cpu block, including its closing tag, stays unchanged -->
```

After doing that, run `qvm-features gpu-gaming-win10 gaming_feature_policies 1` in dom0 (replace `gpu-gaming-win10` with your HVM's name). Then start your HVM, check `/etc/libvirt/libxl/gpu-gaming-win10.xml`, and make sure those options were added to your HVM's XML.
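If you'd rather not eyeball that file, here's a minimal Python sketch that parses the generated domain XML and reports whether both policies made it in. The script, its function names, and the `EXPECTED` table are my own illustration, not anything shipped with Qubes or libvirt, and it assumes the usual libvirt layout with `<cpu>` as a direct child of `<domain>`. A plain `grep` for `invtsc` and `hypervisor` in the same file does the job just as well.

```python
import sys
import xml.etree.ElementTree as ET

# Policies the gaming_feature_policies block in xen.xml is expected to emit.
EXPECTED = {"invtsc": "require", "hypervisor": "disable"}


def cpu_feature_policies(domain_xml: str) -> dict[str, str]:
    """Return {feature name: policy} for every <feature> under <domain><cpu>."""
    root = ET.fromstring(domain_xml)
    return {
        feature.get("name"): feature.get("policy")
        for feature in root.findall("./cpu/feature")
    }


def missing_policies(domain_xml: str) -> dict[str, str]:
    """Return the expected features whose policy is absent or different."""
    found = cpu_feature_policies(domain_xml)
    return {name: policy for name, policy in EXPECTED.items() if found.get(name) != policy}


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. python3 check_features.py /etc/libvirt/libxl/gpu-gaming-win10.xml
    with open(sys.argv[1], encoding="utf-8") as fh:
        missing = missing_policies(fh.read())
    if missing:
        print("missing or different:", missing)
        sys.exit(1)
    print("both gaming feature policies are present")
```

An empty result from `missing_policies` means the template block was rendered for that VM; anything else usually means the feature wasn't set or the VM was started before the template change.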

`<feature policy="disable" name="hypervisor"/>` is actually KEY on my system. Disabling the hypervisor feature on its own gives no gain, and leaving it out entirely doesn't change anything either, but the combination of the two works. Apparently, using `invtsc` together with the hypervisor flag helps offset the performance hit the hypervisor flag would otherwise cause. I am still curious, though, why performance is abysmal both with and without the hypervisor flag when it isn't paired with `invtsc`.

I have tried this under Nobara Linux (KDE + Wayland) but didn't see an improvement in Cyberpunk or Stray via Lutris with Proton GE. However, I don't know how well they run under Lutris on bare metal, so I might try Nobara on metal once I get the NVMe that was supposed to replace my dual-boot drive, and then start attacking that.
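For the Linux guests, one way to confirm what the guest actually sees is to look at the CPU flags in `/proc/cpuinfo`. Below is a minimal Python sketch (the helper names are hypothetical) that assumes the standard x86 `flags` line. With the hypervisor feature disabled, the `hypervisor` flag would normally be absent, and the Linux kernel sets `constant_tsc` and `nonstop_tsc` when the invariant-TSC bit is exposed (`constant_tsc` can also come from the CPU model alone). Treat it as a quick sanity check rather than proof that the policies took effect.

```python
from pathlib import Path

# "hypervisor" is the CPUID bit that <feature policy="disable" name="hypervisor"/> hides.
# The kernel sets constant_tsc and nonstop_tsc when the invariant-TSC bit is exposed
# (constant_tsc may also be set from the CPU model alone).
FLAGS_OF_INTEREST = ("hypervisor", "constant_tsc", "nonstop_tsc")


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the flags listed on the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def report(cpuinfo_text: str) -> dict[str, bool]:
    """Map each flag of interest to whether the guest CPU advertises it."""
    flags = cpu_flags(cpuinfo_text)
    return {name: name in flags for name in FLAGS_OF_INTEREST}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        for name, present in report(cpuinfo.read_text()).items():
            print(f"{name:<14}{'present' if present else 'absent'}")
```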

One thing I will reiterate for anyone coming across this: you definitely want to pass through an NVMe drive to keep your games on. Even with the host itself running on an NVMe, disk I/O on the Qubes-provided (Xen) disk in Windows 10 is only slightly better than a 7200 RPM hard drive, and it will cause massive stuttering in certain games.
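To put rough numbers on the disk comparison yourself, here's a minimal Python sketch of a sequential-write test (the function name and defaults are hypothetical). It writes random data so compression can't inflate the result and calls `fsync` so the timing includes the flush to disk. It only measures sequential writes in a single run, and shader-cache stutter is more about small random I/O, so a dedicated tool such as CrystalDiskMark or fio will give a more complete picture.

```python
import os
import tempfile
import time


def write_throughput_mib_s(directory: str, size_mib: int = 256, block_kib: int = 1024) -> float:
    """Write roughly size_mib MiB of random data to a temporary file in directory,
    fsync it, delete it, and return the measured throughput in MiB/s."""
    block = os.urandom(block_kib * 1024)  # random bytes, so compression can't help
    blocks = max(1, (size_mib * 1024) // block_kib)
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as fh:
            for _ in range(blocks):
                fh.write(block)
            fh.flush()             # push Python's buffer to the OS
            os.fsync(fh.fileno())  # ask the OS to push its cache to the disk
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)
    written_mib = blocks * block_kib / 1024
    return written_mib / max(elapsed, 1e-9)
```

For example, inside the Windows HVM you could compare `write_throughput_mib_s(tempfile.gettempdir(), 1024)` (on C:) with `write_throughput_mib_s(r"D:\Games", 1024)` (the passed-through NVMe, going by the paths used in this post).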

Update: all of the games I had on Win10 have been set up and are running well. The only ones I had to do anything special for were games that use shader precaching (Hogwarts Legacy, Uncharted 4), because the I/O on the Xen disk just isn't good enough. The solution for me was to symlink the game's ProgramData folder to a folder on my NVMe (technically a directory junction, which is what `mklink /J` creates), e.g. `mklink /J "C:\ProgramData\Hogwarts Legacy" "D:\Games\Symlinks\ProgramData\Hogwarts Legacy"`.
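If you need to do this for more than one game, a small Python sketch like the one below can build the same command for each folder. The helper names and the two root constants are hypothetical and just mirror the example paths above. `mklink` is a `cmd.exe` built-in, so it has to go through `cmd /c`, and `mklink /J` refuses to create the link if the folder under `C:\ProgramData` already exists, so move that folder's contents to the NVMe first.

```python
import ntpath
import subprocess

# Hypothetical layout mirroring the example above:
# C:\ProgramData\<game>  ->  D:\Games\Symlinks\ProgramData\<game>
LINK_ROOT = r"C:\ProgramData"
TARGET_ROOT = r"D:\Games\Symlinks\ProgramData"


def junction_command(game_folder: str) -> list[str]:
    """Return the cmd.exe invocation that creates the junction for game_folder."""
    link = ntpath.join(LINK_ROOT, game_folder)      # Windows-style join on any OS
    target = ntpath.join(TARGET_ROOT, game_folder)
    # mklink is built into cmd.exe, so it can't be executed directly.
    return ["cmd", "/c", "mklink", "/J", link, target]


def create_junction(game_folder: str) -> None:
    """Run the command on Windows. The link path must not exist yet; create the
    target folder (and move any existing contents into it) beforehand."""
    subprocess.run(junction_command(game_folder), check=True)
```

On the Windows HVM, `create_junction("Hogwarts Legacy")` runs the same `mklink /J` command shown above; `subprocess` quotes the paths with spaces automatically.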

After symlinking to the NVMe, they run very similarly to bare metal, if not exactly the same. Without the symlink, Uncharted had heavy video and audio stutter all the way through loading and into the game, to the point where it was unplayable.

A better, more permanent solution is to install Windows onto the NVMe rather than using the Qubes-provided disks, but I'm keeping it set up for dual boot as a fallback for a little while, so I will likely do that down the line.

Games I tested:

- System Shock Remake

- Cyberpunk 2077

- Hogwarts Legacy

- Left 4 Dead 2

- Lethal Company

- Outer Wilds

- Starfield

- Starship Troopers - Terran Command

- Stray

- Tales from the Borderlands

- Uncharted - Legacy of Thieves Collection

- Warhammer 40,000 Boltgun