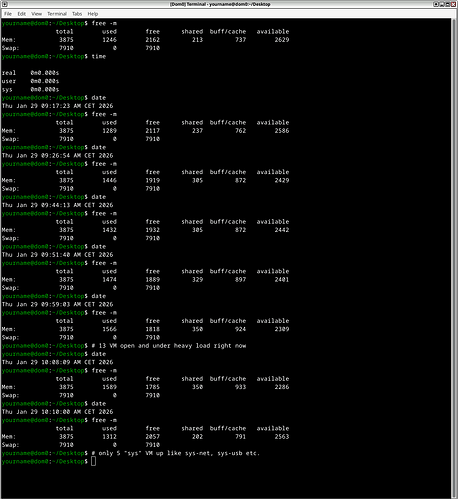

I performed an in-place upgrade from 4.2 to 4.3 yesterday. Besides several things that seem to have stopped working for a number of users (sys-usb, the screensaver, the update tool crashing, a “sluggish” experience), it looks like the graphical environment in dom0 is eating RAM.

I use i3.

RAM relatively early on:

    [me@dom0 ~]$ free -m
                  total        used        free      shared  buff/cache   available
    Mem:           3879        1043        2310         119         706        2836
    Swap:          4015           0        4015

    [me@dom0 ~]$ free -m
                  total        used        free      shared  buff/cache   available
    Mem:           3879        1027        2325         113         700        2851
    Swap:          4015           0        4015

    [me@dom0 ~]$ free -m
                  total        used        free      shared  buff/cache   available
    Mem:           3879        1081        2270         113         700        2797
    Swap:          4015           0        4015

    [me@dom0 ~]$ free -m
                  total        used        free      shared  buff/cache   available
    Mem:           3879        1274        2074         165         756        2605
    Swap:          4015           0        4015

    [me@dom0 ~]$ free -m
                  total        used        free      shared  buff/cache   available
    Mem:           3879        1107        2232         125         725        2772
    Swap:          4015           0        4015

RAM after configuring VMs for a few hours:

    [me@dom0 ~]$ free -m
                  total        used        free      shared  buff/cache   available
    Mem:           3879        2201         317         486        1907        1677
    Swap:          4015           0        4015

    [me@dom0 ~]$ free -m
                  total        used        free      shared  buff/cache   available
    Mem:           3879        2194         325         486        1907        1685
    Swap:          4015           0        4015

RAM 30 minutes later after doing more setup work:

    [me@dom0 ~]$ free -m
                  total        used        free      shared  buff/cache   available
    Mem:           3879        2443         194         557        1898        1435
    Swap:          4015           0        4015

    [me@dom0 ~]$ free -m
                  total        used        free      shared  buff/cache   available
    Mem:           3879        2442         195         556        1897        1436
    Swap:          4015           0        4015
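
To put numbers on the change, here is a small Python sketch that takes the `Mem:` row of the very first and the last `free -m` sample above and prints the per-column difference. The helper names `parse_free_mem` and `delta` are made up for illustration, and the column order is assumed to match the header shown in the output above.

```python
# Columns of the "Mem:" row, in the order shown by `free -m` above.
FIELDS = ["total", "used", "free", "shared", "buff/cache", "available"]

def parse_free_mem(line):
    """Parse one 'Mem:' row of `free -m` output into a dict of MiB values."""
    parts = line.split()
    if not parts or parts[0] != "Mem:":
        raise ValueError("expected a line starting with 'Mem:'")
    return dict(zip(FIELDS, (int(p) for p in parts[1:])))

def delta(before, after):
    """Return after - before for every column."""
    return {name: after[name] - before[name] for name in before}

# First sample ("relatively early on") and last sample from this post.
early = parse_free_mem("Mem: 3879 1043 2310 119 706 2836")
late = parse_free_mem("Mem: 3879 2443 194 557 1898 1435")

change = delta(early, late)
for name, value in change.items():
    print(f"{name:>10}: {value:+d} MiB")
```

Between those two samples, "used" grew by 1400 MiB and "available" dropped by 1401 MiB, while "shared" grew by 438 MiB and "buff/cache" by 1192 MiB.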

Running top -c shows that it is indeed /usr/bin/X whose RAM usage keeps growing.
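
To track this over time instead of eyeballing top, something like the following Python sketch could log the resident memory of the X server at intervals. It reads `VmRSS` from `/proc/<pid>/status`, which is standard on Linux. The process name `"Xorg"` is an assumption (on some systems the process may show up as `X`), and the helper names `rss_kib` and `find_pids` are hypothetical.

```python
import os

def rss_kib(pid):
    """Return the resident set size (VmRSS) of a process in KiB, or None.

    Reads /proc/<pid>/status (Linux only). Returns None if the file is
    unreadable or has no VmRSS line (e.g. kernel threads).
    """
    try:
        with open(f"/proc/{pid}/status") as status:
            for line in status:
                if line.startswith("VmRSS:"):
                    return int(line.split()[1])
    except OSError:
        return None
    return None

def find_pids(name):
    """Return the PIDs whose /proc/<pid>/comm matches the given name."""
    pids = []
    try:
        entries = os.listdir("/proc")
    except OSError:
        return pids
    for entry in entries:
        if not entry.isdigit():
            continue
        try:
            with open(f"/proc/{entry}/comm") as comm:
                if comm.read().strip() == name:
                    pids.append(int(entry))
        except OSError:
            continue  # process exited or is not readable
    return pids

# Assumption: the X server process is named "Xorg"; adjust if it is "X".
for pid in find_pids("Xorg"):
    print(f"Xorg pid {pid}: {rss_kib(pid)} KiB resident")
```

Running this from a loop or a timer and appending the output to a file would give a growth curve that can be attached to a bug report.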

I wonder whether the instability, the crashes, and the updater crash that others appear to be experiencing as well are related to this. Soon there will be no RAM left to eat.

I have yet to really use the system, but if it continues like this, I fear that something will crash soon, which would be pretty problematic.

Update note:

I forgot to add: just two days ago, right before upgrading, I bumped my LUKS key to 2 GB of RAM from within dom0, and there was at least 2.5 GB or 3 GB available on 4.2. I wasn’t monitoring it because 4.2 was working great for me, but it appears that this didn’t happen before.