No they don’t. The video is titled “Tampering Detection”, and nowhere in the blog post do they talk about prevention, only evidence…

For the rest of your post, I will quickly address the points that don’t make sense to me again.

That is not what I said, and again you propose a really simplistic shortcut to prove a point. Unfortunately, this is called a straw man argument.

A straw man fallacy is the informal fallacy of refuting an argument different from the one actually under discussion, while not recognizing or acknowledging the distinction. One who engages in this fallacy is said to be “attacking a straw man”. (Wikipedia)

You could not, on any Heads platform supported today, do this attack with an OS flashrom hook, simply because Heads doesn’t enforce booting the system with iomem=relaxed unless you signed /boot options yourself to permit that explicitly, meaning you modified the grub options and then signed the /boot hash digest content prior to launching this attack. ivy/sandy/haswell platforms? You could only flash firmware from within Heads, thanks to PR0, and I will push the ecosystem to attempt to enforce the same thing on post-Skylake, though that’s a coreboot task (I don’t understand why it was not investigated more and enforced the same way as on pre-Skylake; I have already invested a lot of time in that, but it is not a priority for coreboot devs).
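To make the precondition concrete, here is a minimal sketch (not Heads or flashrom code) of checking whether the running kernel was booted with iomem=relaxed. The function names are hypothetical, and the check only covers the one condition discussed above; it says nothing about PR0 or other flash protections.

```python
def allows_relaxed_iomem(cmdline: str) -> bool:
    """Return True if the kernel command line contains iomem=relaxed.

    Hypothetical helper: it only inspects boot parameters and does not
    prove that internal flashing would actually succeed.
    """
    return "iomem=relaxed" in cmdline.split()


def read_cmdline(path: str = "/proc/cmdline") -> str:
    """Read the command line of the currently running Linux kernel."""
    with open(path, encoding="utf-8") as f:
        return f.read().strip()


if __name__ == "__main__":
    print(allows_relaxed_iomem(read_cmdline()))
```

On a system where the signed /boot options were never changed to add that parameter, this should report False, which is the situation described above.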

This is what is bothering me @TommyTran732: along with interesting points, you also inject information that is technically totally wrong. Try this against any corebooted laptop: you simply cannot successfully do what you claim is possible, and claiming it spreads FUD and distrust among readers against the security premises offered by coreboot when it is not wrongly configured. Even libreboot, which is not security oriented, would not permit firmware tampering in such a way, and documents exactly the same thing. Search that up, and you will destroy that argument yourself. This is a waste of my time.

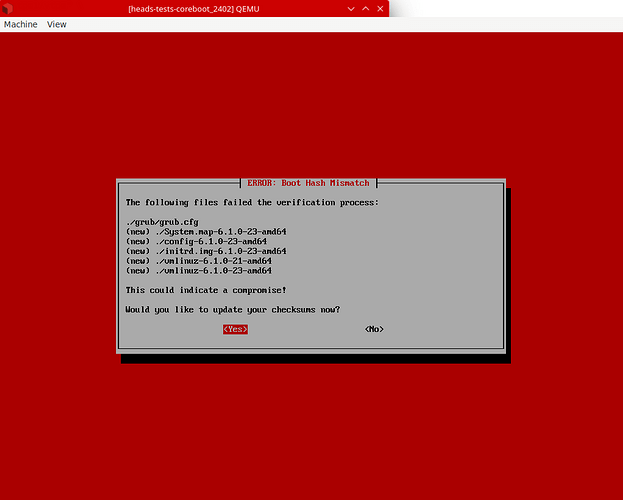

Then, let’s say you stupidly modified grub.cfg and had previously detach-signed the /boot digest, permitting iomem=relaxed there. After flashing the firmware, unless the firmware image was perfectly crafted with the points from my long past post considered (you haven’t addressed any counterpoint from it…), you missed the point about the time and effort needed to tamper with not only the bootblock, but also flashrom, the other coreboot stages and the whole cryptography API of the coreboot code in order to successfully flash a completely valid ROM, one that reports all the right measurements to exactly match the TCPA/TPM event log traces extending PCR2, then all the Heads parts, including hardcoding all the PCR details listed at Keys and passwords in Heads | Heads - Wiki. That’s the part I’m interested in, outside of the theoretical bootblock part. I would agree with you that if it were that simple, it would be dishonest and unethical, but the point is that it isn’t that simple. The fact that you bring up invalidated theories is the dangerous part here.

How did you not only get onto an unlocked computer, but also get root/sudo credentials or even the TPM DUK?!?! I don’t follow you here. This is exactly what security in depth is, this is exactly what the articles you referred to are about, plus Purism pushes the use of detached signed LUKS authentication with a USB Security dongle, which your code snippet wouldn’t permit, unless, again, there is no screensaver. So basically we are talking about a dumb user here who voluntarily deactivated all the security measures enforced by default nowadays.

Anyway, so you flash a firmware internally through a hook. Chances are you didn’t craft it perfectly, for the aforementioned reasons. On reboot, it’s not just about an OS upgrade modifying /boot content: one single PCR not matching prevents TPMTOTP (the firmware-related sealed secret) from unsealing, so TOTP can be verified neither against the phone nor through reverse HOTP against supported HOTP USB Security dongles (same secret, reverse challenged).

So what are we talking about here? Let’s say you have access to the enrolled USB Security dongle. You would need to know both PINs to reseal HOTP and to reseal the TPM Disk Unlock Key, which requires not only the TPM ownership PIN but also the Disk Recovery Key passphrase defined at OS install for LUKS container decryption?? I don’t follow you, unless you crafted a perfectly valid ROM with hardcoded TPM extend measurements in both the coreboot and Heads codebases to match the GPG keyring and trustdb at re-ownership time, and had access to a backup of the SPI content. You would still have to type the TPM-unsealed LUKS Disk Unlock Key passphrase!!! The TPM will fail to unseal both TPMTOTP and the TPM DUK. You could maybe pass TPMTOTP, but passing both and tampering with everything totally correctly is not a feasible attack as of today; it’s barely a theoretical possibility, and that doesn’t justify Bootguard as an argument. Straw man argument, solely.
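For readers who don’t live in this stuff, here is a toy model of why one wrong measurement kills unsealing. It is a sketch under assumptions, not coreboot or Heads code: I assume a SHA-256 PCR bank (TPM 1.2 uses SHA-1), the stage names are made up, and `unseal` is a stand-in for a policy check that a real TPM performs internally.

```python
import hashlib
from typing import List, Optional

PCR_SIZE = 32  # assumption: SHA-256 bank; a SHA-1 bank would be 20 bytes


def extend(pcr: bytes, component: bytes) -> bytes:
    """PCR extend: new_pcr = H(old_pcr || H(component))."""
    digest = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + digest).digest()


def replay(components: List[bytes]) -> bytes:
    """Start from an all-zero PCR and extend it with each component in order."""
    pcr = bytes(PCR_SIZE)
    for component in components:
        pcr = extend(pcr, component)
    return pcr


def unseal(secret: bytes, sealed_pcr: bytes, current_pcr: bytes) -> Optional[bytes]:
    """Toy stand-in for TPM unsealing: release the secret only on an exact match."""
    return secret if sealed_pcr == current_pcr else None


# Hypothetical boot chain; the names are illustrative only.
good = [b"bootblock", b"romstage", b"ramstage", b"heads-payload"]
tampered = [b"bootblock", b"romstage-modified", b"ramstage", b"heads-payload"]

sealed_against = replay(good)
print(unseal(b"totp-secret", sealed_against, replay(good)) is not None)
print(unseal(b"totp-secret", sealed_against, replay(tampered)) is None)
```

Because each extend hashes the previous PCR value, a change in any single stage changes every value after it. So a tampered ROM has to lie about every measurement consistently, which is the effort I keep pointing at.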

Fleet? You just justified the only Bootguard use case I agree with, without counterpoint. The end user is not even the owner of the machine here. An organization rents hardware: totally justified, though leaked keys are still scary and it still comes down to blind faith. But we already discussed that.

Well, /boot/firmware_update contains my last reproducibly built and flashed firmware image, and I can confirm today that Heads CircleCI builds give the same result as local builds, because reproducible builds are resolved. That, or a zip file of the last flashed version on a USB thumb drive. If I left my laptop behind (I never do that, do you? So much depends on me that I cannot afford to), then internally upgrading resolves the uncertainty. But I get that you don’t trust flashrom under Heads… But then you don’t trust PR0 either, nor any of the past grant-funded work, collaboration and review by security experts (see Nlnet past funded work placeholder for Authenticated Heads project (2022-ongoing) · Issue #1741 · linuxboot/heads · GitHub). I cannot argue against that. It’s again just faith related, just like you decide to trust Bootguard instead. But I won’t fall into that rabbit hole again.
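What “builds the same result” means in practice is simply that two independently produced images hash to the same digest. A minimal sketch of that comparison, assuming SHA-256 and two ROM files you already have on disk (the helper names and the paths in the comment are hypothetical, not Heads tooling):

```python
import hashlib


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def same_image(local_rom: str, ci_rom: str) -> bool:
    """True if a locally built ROM and a CI-built ROM are bit-for-bit identical."""
    return sha256_file(local_rom) == sha256_file(ci_rom)


# Example call with hypothetical paths:
# same_image("build/local.rom", "downloads/ci.rom")
```

If the digests match, you don’t have to trust either build environment alone; you only have to trust that both were not compromised in exactly the same way.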

That was not the point. How are you bettering it?

And how do you trust that your vendor has not leaked keys? Faith. That is my whole point. Anyway, that was my 2 cents. Can we stop looping?

OTP without the possibility of transferring ownership was proved wrong. That and the closed source UEFI supply chain mess should be avoided at all costs, maybe less so if fwupd is supported, depending on track record. That’s it! My previous points on Dasharo are exactly the same. I just trust them more, but I will never know if they leaked keys. I would choose to believe they have not, that’s all. And I would accept the risk if I had to enforce/maintain firmware security for a fleet, way more than with other manufacturers, given the firmware supply chain mess I’m aware of from ODM/OEM vendors which are bootguarded today. They are making that change (still using proprietary tools to do so…) as the answer to your need to delegate trust in the hardware RoT to someone, and they will answer that call with Novacustom (coreboot+heads!). Again, this toolset is only available through NDA and such, which is again total nonsense, a paywall, etc., but that is outside this debate. We are talking about oligarchy vs user control. There is no possible common ground here. Either you delegate trust or you do what is necessary to protect yourself. This is the world we live in. You can deny it or live with it. I choose the latter.

I’m using GrapheneOS though, on a Pixel phone. I gave up on controlling this and delegate the same trust you are talking about, and I completely understand. But a phone is more volatile and the trust model is different. I have a lot to say against Google, but also a lot of positive things to say… I relatively trust that phone for lack of other choices; that’s another subject altogether. I need a phone. Replicant is not possible anymore with 3G having been phased out. Trust anchors were even weaker there, and the work which could have improved that was abandoned. I stopped worrying, but I have way less state to protect on that device than on the one I’m developing on, where that work is needed by countless users who depend on QubesOS for security and Whonix for anonymity (no, QubesOS is not privacy focused, that’s another topic: Whonix is. You go in all directions with questionable claims). I need to be trusted and did everything to be so. And I am now working toward shaking off that OTP mess, which nobody should rely on until transfer of ownership is possible, but I understand the need for a fleet managed by a third party, where users don’t own anything in their devices anyway (NDA and invasive contracts) and could be, and probably are, monitored in all their actions anyway through corporate-enforced security policies and legal discharges. Different topics, once again.