Maybe, but as far as I am aware there’s no fourth solution to the topic.

You are Pythia without priests

Almost like Oracle without ERP, yep.

Hi @all,

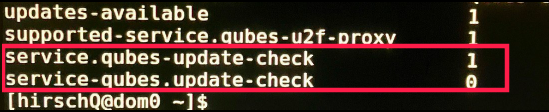

I want to use a script to clone some templates for DR and disable update-check for them. When I use qvm-features, it adds a second update-service entry instead of updating the existing one:

Via Qubes Manager it works as expected: unchecking the box in the Services tab of the qube settings removes the value for the update service.

I tried some workarounds (below), but with no success. Any ideas on this?

qvm-features QUBE service.qubes-update-check 0

qvm-features QUBE service.qubes-update-check ' '

qvm-features QUBE service.qubes-update-check --delete (this removes the duplicate, but not the original)

qvm-features QUBE service.qubes-update-check --unset

My system: 5.15.94-qubes.fc32.x86_64

Thx & Regards,

hirschQ

It looks like there was a typo when running the qvm-features command.

In your screenshot, @hirschQ, you can see two distinct flags:

- service.qubes-update-check (dot after the first word)
- service-qubes.update-check (dot after the second word)

The next step is to remove the meaningless flag and set the other one again (this time with the correct name).
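For the original goal (cloning templates for DR with the update check disabled), a minimal sketch might look like this. It assumes dom0 with the standard qvm-clone, qvm-service and qvm-features tools; clone_for_dr and fix_typo are hypothetical helper names. It uses qvm-service --disable, which as far as I know writes the same service.qubes-update-check feature the Services tab does, so there is no feature name to mistype.

```shell
#!/bin/sh
# Sketch only: run in dom0. Helper names are hypothetical.

# clone_for_dr SRC DST: clone template SRC to DST, then disable the
# qubes-update-check service on the clone only (SRC is left untouched).
clone_for_dr() {
    src=$1
    dst=$2
    qvm-clone "$src" "$dst" || return 1
    # Should be equivalent to unchecking the box in the Services tab,
    # i.e. it sets service.qubes-update-check (dot after "service").
    qvm-service --disable "$dst" qubes-update-check
}

# fix_typo QUBE: drop the mistyped feature (dot and dash swapped).
fix_typo() {
    qvm-features "$1" service-qubes.update-check --unset
}
```

For example, clone_for_dr debian-12 debian-12-dr, then fix_typo on any qube that still carries the misspelled flag; qvm-features debian-12-dr with no feature name lists what is actually set.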

While trying to figure out why I never receive an update notification unless a template is actually running (my workaround is to clone commonly used templates into what I call an update canary and run that with minimal memory), I saw this question, and it’s one I can answer.

If you really want to exclude just one qube from updating, you can create a script to do this, a bit more elaborate than was suggested. Have it run qvm-ls with an option that prints a one-column list of qubes (for example --raw-list), set an array variable in your shell script to the results, and loop over it. Inside the loop, check that the name of the template is NOT the name of your Windows template. If it is not the same, go ahead and update.
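The loop described above could be sketched like this. Instead of comparing every qube name, it filters on the CLASS column, assuming qvm-ls --raw-data --fields NAME,CLASS prints pipe-separated NAME|CLASS rows. templates_except and update_all_but are hypothetical helpers, and the actual update command is left to you.

```shell
#!/bin/sh
# templates_except EXCLUDE: print every TemplateVM name except EXCLUDE,
# one per line (assumes "NAME|CLASS" rows from qvm-ls --raw-data).
templates_except() {
    qvm-ls --raw-data --fields NAME,CLASS |
        awk -F'|' -v skip="$1" '$2 == "TemplateVM" && $1 != skip { print $1 }'
}

# update_all_but EXCLUDE CMD...: run CMD once per remaining template,
# with the template name appended as the last argument.
update_all_but() {
    skip=$1
    shift
    # Qube names cannot contain spaces, so plain word splitting is safe here.
    for tpl in $(templates_except "$skip"); do
        "$@" "$tpl"
    done
}
```

A dry run such as update_all_but win7 echo would-update shows which templates would be touched before you substitute your real update command for echo.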

I was just wondering if there was any existing facility about this.

Yes, scripting is always an option. It just confirms the usability problem.

Should we suggest this on GitHub?

In 4.2, the templates are updated via the dom0 command qubes-vm-update.

There is a --skip option available (https://github.com/QubesOS/qubes-core-admin-linux/blob/main/vmupdate/vmupdate.py#L45).

e.g.

[user@dom0 ~]$ qubes-vm-update --templates --skip win7

This skip option is not (yet) implemented in the GUI tool.

“Update checks” are done via a systemd timer.

    [user@some_qube]$ cat /usr/lib/systemd/system/qubes-update-check.timer
    [Unit]
    Description=Periodically check for updates
    ConditionPathExists=/var/run/qubes-service/qubes-update-check

    [Timer]
    OnBootSec=5min
    OnUnitActiveSec=2d

    [Install]
    WantedBy=multi-user.target

You can check the status of the timer with:

[user@some_qube ~]$ systemctl list-timers --all
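Because of the ConditionPathExists line, the timer only does anything if the flag file exists inside the qube. A quick check to run inside a qube might look like this; update_check_status is a hypothetical helper, and QUBES_SERVICE_DIR is a made-up override that exists only to make the function testable.

```shell
#!/bin/sh
# update_check_status: run inside a qube. Reports whether the flag file
# that qubes-update-check.timer's ConditionPathExists points at is present.
update_check_status() {
    # QUBES_SERVICE_DIR is a hypothetical override (testing only).
    flag="${QUBES_SERVICE_DIR:-/var/run/qubes-service}/qubes-update-check"
    if [ -e "$flag" ]; then
        echo "enabled"
    else
        echo "disabled"
    fi
}
```

If it prints enabled, systemctl list-timers --all qubes-update-check.timer should then show when the next check is due.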

So, a qube checks for updates 5 minutes after it boots, and then again 2 days after each previous check.

While your computer is suspended, the timer does not advance.

Depending on how much you use suspend, the time between two update checks could be 4 or even 5 days.
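A rough worked example of that estimate, assuming (as described above) that the 2-day interval only counts time when the machine is awake, and that the qube is running whenever the machine is awake. If $f$ is the fraction of wall-clock time the machine is not suspended, the wall-clock gap between two checks is roughly

$$
T_{\text{wall}} \approx \frac{T_{\text{timer}}}{f} = \frac{2\ \text{days}}{f}
$$

For example, $f = 0.5$ (suspended about 12 hours a day) gives $T_{\text{wall}} \approx 4$ days, and $f = 0.4$ (suspended about 14.4 hours a day) gives $T_{\text{wall}} \approx 5$ days.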

I think this has already been discussed.

That matches the behavior on my system…so I am pretty confident the timers are there, etc. The one thing that seems off is that I have to be running the template itself for it to work. (And I’ve gotten updates to show up sooner by restarting the template, because it does an immediate check then.) I remember back in the past I could start an AppVM and soon afterwards, I’d be told the template needed updating.

(So now I leave a bunch of templates, with minimal RAM assigned, running full time. In order not to run my real templates and thus possibly expose them to something-or-other, I clone some of them, such as the basic one I derive all others from, and run the clones. When a clone needs an update, the original, and anything else I cloned off of it, does too.)

Running a dispvm whose dvm template is based on the template doesn’t cause updates to show; the template itself must be running. That’s what I’m trying to figure out. I wasn’t clear; my apologies.

I do know the update check service is enabled on my VMs.

It has just occurred to me that perhaps I’m seeing this because I am running disposables rather than “regular” AppVMs. (In other words: perhaps AppVMs cause an update check to be made, but disposables do not.)

Another possibility is that using the cacher might have something to do with it, though I don’t know what. Also salt may or may not have an effect.

The service is explicitly enabled on my VMs, yet when I run them, I do not get a notification that the template needs to be updated, in spite of everything said here indicating that it should work.

As I said to szz9pza, I just realized that perhaps the problem is that most of the time I am running a disposable, not a regular AppVM. If the check service just asks about a VM’s template, it will check the disposable template (the dvm template) for updates, not the “real” template.
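To see which qubes a disposable actually sits on top of, one could follow the template property upwards. This is only a sketch: template_chain is a hypothetical helper, it assumes qvm-prefs QUBE template prints the template name and fails for qubes (such as TemplateVMs) that have no template property, and by itself it does not confirm which qube the update check reports on.

```shell
#!/bin/sh
# template_chain QUBE: print QUBE, then the qube it is based on, and so
# on, stopping at the first qube that has no "template" property.
template_chain() {
    vm=$1
    while [ -n "$vm" ]; do
        echo "$vm"
        # qvm-prefs fails for qubes without a template property
        vm=$(qvm-prefs "$vm" template 2>/dev/null) || break
    done
}
```

For a disposable, the output should list the disposable, its dvm template, and finally the real TemplateVM.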

I always update manually via CLI.

Benefits:

- simplicity

- no automatic connections to various hosts

- no traffic waste

I take it you mean you do an update whether there’s an indication that a popup is needed, or not. If so that will certainly work though it takes up more bandwidth.

If that’s not what you mean, you missed the point, which is that I am not getting the notifications unless I am actually running the templates.

> I take it you mean you do an update whether there’s an indication that a popup is needed, or not.

All automatic checks for updates are disabled, so I don’t expect indications. Only if something special is done in a qube (e.g. trying to install a package), it sometimes shows the tray icon notification that there are updates for that template. I don’t know why this happens. It doesn’t bother me.

> though it takes up more bandwidth.

How exactly?

> I am not getting the notifications unless actually running templates.

Are they enabled? (Qubes Global Settings → Enable All + checkboxes)

Last question first: yes, they are enabled. The timer that szz alluded to is running. Otherwise running my templates (or rather clones thereof) wouldn’t work at all.

It’s more bandwidth because every time you do this you run updater on every single template whether it needs it or not. Whereas the usual route only checks for updates on templates whose appvms are run. If you have very few templates there’s little difference, but I have dozens of them.

I try to manage this by cloning my “sys-base” minimal template (the one upon which all other templates are based) and simply running the clone with minimal memory. When it needs an update, all templates (other than Windows 7) need to be updated. Other templates were originally cloned from sys-base (such as the fancier one for applications, app-base), and some of them have a lot of descendants…I clone those too and run the clones. When a clone reports that an update is needed, I update the original and all its descendants. All told I have six clones running; three of them don’t have descendants but do touch the internet, so I want to keep them updated. In fact, Brave Nightly often needs updates, but that only affects the Brave Nightly qube. Most of my calls to update are for that one qube. (Needless to say, I use the updater GUI to update the clone at the same time.)
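Creating one of these “update canaries” could be scripted roughly as follows. make_canary and the SRC-canary naming scheme are hypothetical; the 400 MB default is the per-clone figure mentioned later in this thread, and setting maxmem equal to memory is my own assumption for keeping the clone small.

```shell
#!/bin/sh
# make_canary SRC [MEM_MB]: clone template SRC to SRC-canary with a small,
# fixed memory allocation, then start it so its update check can run.
make_canary() {
    src=$1
    mem=${2:-400}            # MB; default taken from this thread
    canary="$src-canary"     # hypothetical naming scheme
    qvm-clone "$src" "$canary" || return 1
    qvm-prefs "$canary" memory "$mem"
    qvm-prefs "$canary" maxmem "$mem"
    qvm-start "$canary"
}
```

For example, make_canary sys-base would create and start sys-base-canary.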

So I am trading having to run about 1.5 GB of otherwise useless qubes against gratuitously updating whether it’s needed or not. I’m on a 64 GB system with good connectivity (so 1.5 GB is a small price) and also on a 32 GB system with crappy connectivity (where 1.5 GB, though a bit steeper, is worth it not to have to run lots of updates).

> It’s more bandwidth because every time you do this you run updater on every single template whether it needs it or not. Whereas the usual route only checks for updates on templates whose appvms are run. If you have very few templates there’s little difference, but I have dozens of them.

The question is what “checks” means and how frequently that happens in each scenario (manual/automatic). If the automatic updater checks 5 min after each reboot (when you also run AppVMs), that will surely use more traffic, as it is more frequent than doing it manually, e.g., once a week. Additionally, I don’t think it is necessary to notify remote hosts each time I reboot.

Yes, in my setup, they will all be updated, if updates are available. If there are no updates, only the “check” will use traffic, once a week. It seems the most traffic-consuming check is that of dom0. One can skip it and/or manually update individual templates.

Hence my conclusions about the benefits of manual updates. I don’t insist that I am right though. It is just something that works for me.

Related:

Might be useful too (still not tested personally):

I have cacher, but I have no idea if it actually makes a difference. I can’t prove that it’s actually caching though I do know it’s part of the “chain” of VMs that make up my clearnet updating.

I didn’t realize you were only doing a “global” update every week. Recall that the ONLY updates being checked for on my system (because it’s broken) are for six templates (though some of them mean everything or almost everything must be updated). Those get checked when I start them…then every two days after that. Thus my laptop, which I shut down at least once a day, is likely to know about an update before my desktop (which I leave running). I just restart the relevant clone, a few minutes later it says “update me”, and I do so (and update the affected real template(s) as well).

I don’t use a lot of comm bandwidth doing it this way; I do have a bunch of otherwise-extraneous VMs running though. But they work fine on 400MB apiece.

One disadvantage of having it trigger off AppVMs running is that–for instance–if a browser qube says it needs updating…does that apply to ALL of my templates or just the ones where that browser is installed? There’s no way to tell. With my clones (which I call Update Canaries), I’m liable to find out how far back in the tree the update actually is. If sys-base’s canary needs an update, I gotta do them all. If it’s just firefox, then about five VMs will need updates. If it’s brave nightly (the most frequent one) then only one VM does.

Your setup is too complicated for my small brains.

Part of it comes from the way I do minimal qubes which is because I learned from Sven, who would start with debian-11 (at the time), clone it, add stuff, clone again, add more stuff. You end up with a “family tree” of templates that way. For what we’re talking about here the relevant point is that if something that came from that first template needs updating…they all need it. If it’s something just on that template, then only that template needs it. The canaries at least give me an idea of what has to be updated when one of them needs to be updated.
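As far as I know, Qubes does not record which template a clone came from, so working out what to update when a canary fires means keeping that family tree yourself. A minimal sketch, assuming a hypothetical hand-maintained text file with one “child parent” pair per line and no cycles; descendants is a hypothetical helper that does a breadth-first walk in awk.

```shell
#!/bin/sh
# descendants FILE ROOT: print ROOT and every template cloned (directly or
# indirectly) from it, breadth-first. FILE holds "child parent" lines.
descendants() {
    awk -v root="$2" '
        NF >= 2 { kids[$2] = kids[$2] " " $1 }   # parent -> list of children
        END {
            queue[1] = root; head = 1; tail = 1
            while (head <= tail) {
                cur = queue[head++]
                print cur
                n = split(kids[cur], list, " ")
                for (i = 1; i <= n; i++) queue[++tail] = list[i]
            }
        }' "$1"
}
```

With sys-base at the root of the file, descendants on sys-base lists every template that needs updating when the sys-base canary reports an update; a leaf such as a single-browser template lists only itself.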