I have a use case that may not be the same as OP’s.

I have a file server, attached to a specific VM, where I archive material that comes from the Web. The file server holds far more than just that class of file, so I don't want to mount it on the Web browsing VM (otherwise a total compromise of the Web browsing VM would mean full access to the file server's entire contents). Instead, the file server is attached to a dedicated VM, which I'll call the media server VM.

So what I do now is mount a subfolder exported by the media server VM on my browsing VM. That way I can save archival material directly into that subfolder.

Before this setup, I suffered through saving files locally on the Web VM, then sitting through long-ass sessions organizing them by (1) `qvm-copy`ing them from the Web VM to the media server VM and (2) drag-and-drop copying them from the media server VM to the file server. This was exhausting, and it actively discouraged me from organizing the files at all.

Now I can just use the file chooser in my Web browser to select the exact folder I want to drop an archival download into. All archival material ends up in its final destination: the corresponding folder on my NAS machine.

HUGE difference in terms of usability.

Is this riskier than copying files manually?

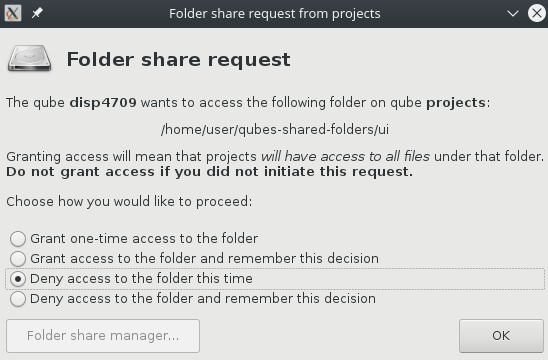

Yes, I'd argue it is. Right now there is no policy mechanism to prevent the Web VM from mounting "the wrong folder", although there will be one in the future. Furthermore, since this involves complex protocols between VMs, there is always the possibility that a bug gets exploited. By the way, exactly the same is true of Samba and NFS setups that use `qubes.ConnectTCP`; my solution is simply far less complex, in terms of both code and configuration, than something like Samba or NFS.
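As an illustration of the missing "wrong folder" protection, here is a minimal sketch, assuming a hypothetical per-VM allowlist, of the kind of check such a policy could perform before a folder is exported. The VM name `web-browsing`, the path `/mnt/nas/archive`, and the function `is_export_allowed` are illustrative assumptions, not the actual shared folders API. The exporting side honors a mount request only if the requested path, after normalization, falls inside a folder explicitly allowed for the requesting VM.

```python
import posixpath
from pathlib import PurePosixPath

# Hypothetical allowlist: which folders each requesting VM may mount.
# Names and paths are illustrative only.
ALLOWED_EXPORTS = {
    "web-browsing": [PurePosixPath("/mnt/nas/archive")],
}


def is_export_allowed(requesting_vm: str, requested_path: str) -> bool:
    """Return True if requested_path lies inside a folder allowed for requesting_vm."""
    # Collapse ".", ".." and duplicate slashes lexically so a request like
    # "/mnt/nas/archive/../private" cannot escape the allowed root.
    # Note: this does NOT resolve symlinks.
    normalized = PurePosixPath(posixpath.normpath(requested_path))
    if not normalized.is_absolute():
        # Reject relative paths outright; they have no unambiguous meaning here.
        return False
    for root in ALLOWED_EXPORTS.get(requesting_vm, []):
        # Allow the root itself or anything underneath it.
        if normalized == root or root in normalized.parents:
            return True
    # Unknown VM or path outside every allowed root: deny by default.
    return False
```

Because the check is purely lexical, a real implementation would also have to resolve symlinks on the exporting side; otherwise a link placed inside the allowed folder could still point somewhere else on the file server.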

Is the current tradeoff worth it?

For me, it is. I can now do something I could not do before, much more quickly, at a level of risk I find acceptable (and that risk will drop once the shared folders system asks for authorization before exporting a specific folder).